Our Workslop Era: The Slopification of Work, and How to Stop It

Cognitive offloading, collapsing standards, and how GenAI is undermining the workplace

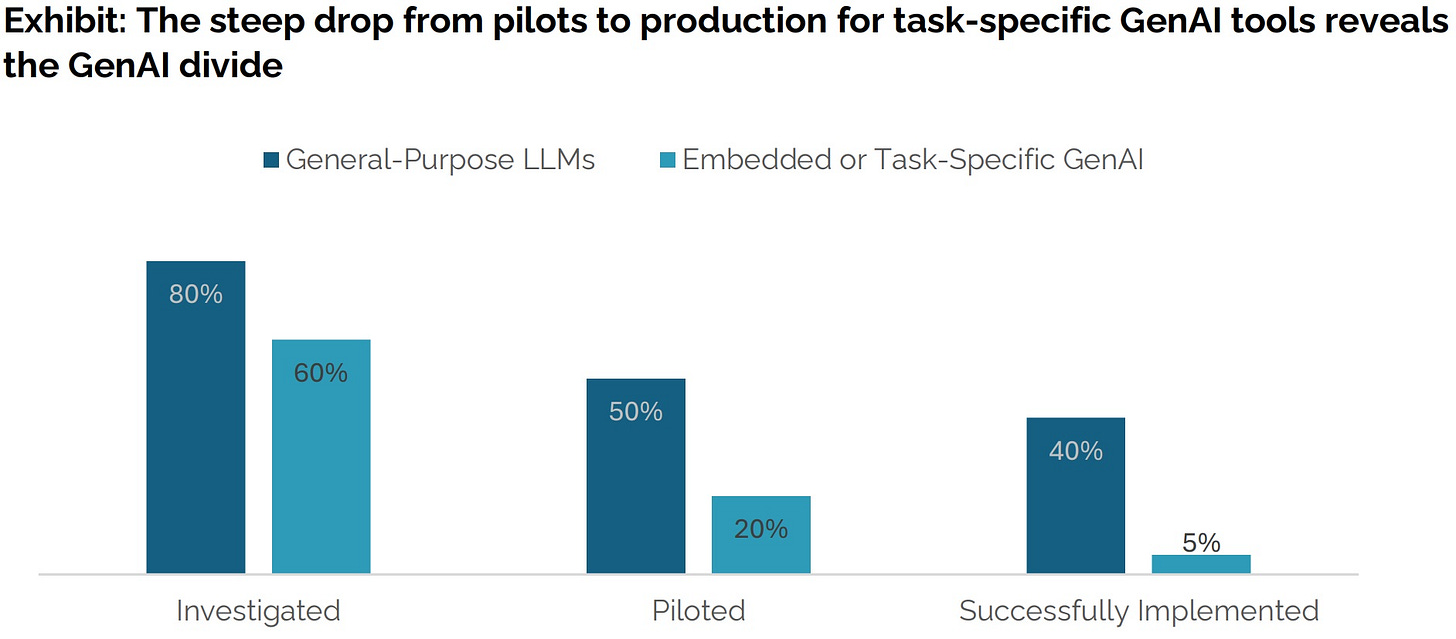

The GenAI divide is real. According to Gallup, the share of employees using AI at work has nearly doubled since 2023, from roughly 21% to 40%. But the most recent studies from MIT and BetterUp point to a clear conclusion: so far, companies investing heavily in fully AI-led processes are seeing no discernible ROI.

This is in stark contrast to the endless news and enthusiasm we’ve been inundated with online.

The question is: why? Is GenAI destined to be a net negative for businesses, or are we just in an awkward growth phase of this technology?

Let’s unpack what’s going on here: the problem, how we got here, and how companies can train and prepare for the future.

The Problem with Workslop

First off, what is workslop?

Workslop is defined as AI-generated work content that masquerades as good work, but lacks the substance to meaningfully advance a given task.

If you’ve been working lately, this concept isn’t new and shouldn’t come as a surprise. You might even have a colleague (or two) who sends you almost exclusively slop, and you probably roll your eyes when you read it. From obviously AI-generated Slack responses to horribly written code, shallow AI-generated content has exploded all over the internet.

Real humans are spending their time reading this stuff, and it’s often just good enough to slip by unnoticed. But this overload of shallow content creates numerous problems, from the cognitive demands it places on the people reading it to the maintenance and rework required to fix erroneous outputs. Too often, GenAI usage has traded quality for quantity, and we’re collectively realizing that this is a step in the wrong direction.

I’ve written about this before, but it bears repeating: using GenAI for cognitive offloading is bad for our brains. Critical thinking is like any other muscle. If you offload the thinking to a model, you inevitably end up with a shallower understanding of both the task you offloaded and its subject matter. Of course, this may be desirable for some types of tedious work, but there is a distinct difference between automation and GenAI cognitive offloading: automation speeds up workflows and frees up your time to think critically about what you’re actually trying to do.

GenAI, when used carelessly, can result in cognitive automation, which is the opposite of what you want if you’re running a business. After all, paying a team of people who have only a shallow idea of what’s even going on sounds like a nightmare, right?

Cognitive automation can lead to what I call synthetic authorship: using AI to generate your thinking, writing, and ideas, then passing them off as entirely your own intellectual output. I wrote about this at length back in April. This problem will get worse before it gets better.

There’s a laundry list of problems with slop. Based on reports and testimonies online, here is my attempt to summarize them as concisely as possible:

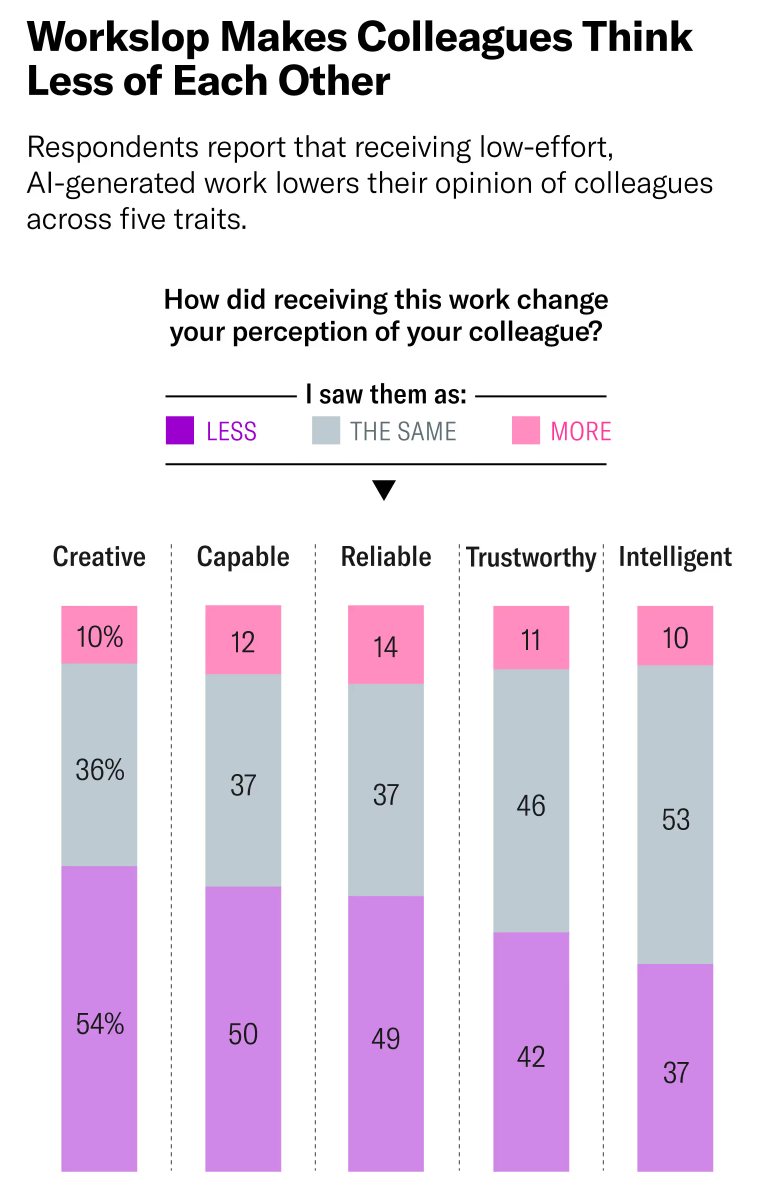

Workslop erodes trust and collaboration, making colleagues appear less capable and discouraging future teamwork.

It imposes a hidden productivity tax, forcing recipients to spend hours redoing or clarifying low-quality outputs.

It normalizes mediocrity, lowering organizational standards and rewarding shortcuts over genuine excellence.

It fails in critical contexts, producing content that looks polished but lacks accuracy, context, or reliability.

It reinforces inequities, with AI use triggering competence penalties and harsher judgment for some groups.

It undermines morale and culture, leaving employees frustrated, cynical, and disengaged.

It wastes leadership investment, with most AI pilots showing little ROI while deepening dependence on flawed outputs.

The erosion of trust is a huge concern, because receiving workslop changes how people perceive the sender. HBR’s recent survey of U.S.-based full-time employees found that 40% had received workslop in the last month, and respondents estimated that roughly 15% of the content they receive at work qualifies as workslop.

This reminds me of the now commonplace assumption that distracted driving is as bad as, or worse than, drunk driving. Each problem is bad enough on its own, but together they create a new type of workplace nightmare. Trust falls, time is lost, and quality dips. Couple that with weekly headlines proclaiming the end of white-collar work, and you’ve got a recipe for a jaded workplace. Surely there’s an upside?

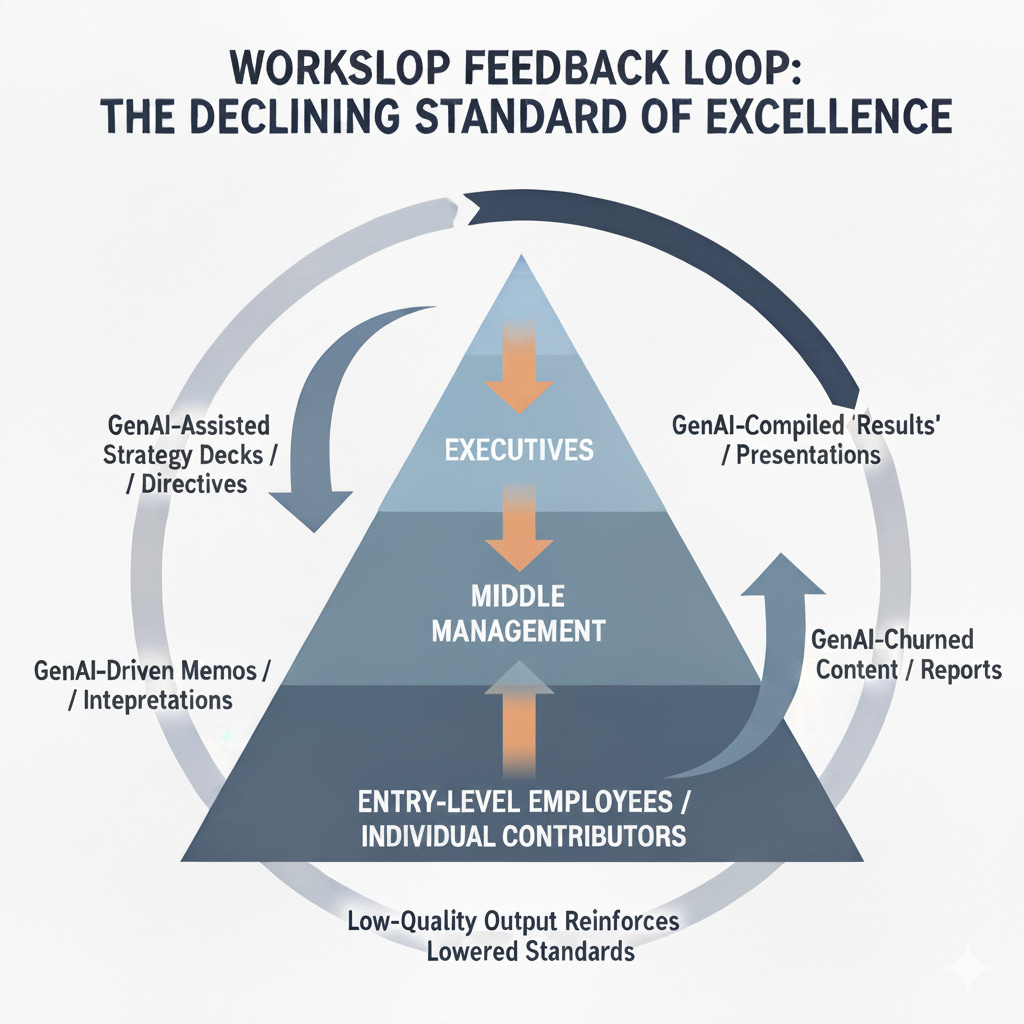

The Workslop Feedback Loop

We’re seeing what I’m calling the Workslop Feedback Loop™. Slop can flow from the top down at an organization, or from the bottom up. Increasingly, it is happening in both directions. Everything turns to mush.

Let’s visualize and walk through how this happens.

Executives → Sitting at the top of every org, they start using GenAI to push polished but often shallow or empty strategy decks and directives down to middle management.

Middle Management → Recognizes that the directives from above are vague or poorly thought out, but reinterprets them anyway, adding a layer of complexity (with GenAI) before passing them down to individual contributors and lower-level staff.

Individual Contributors and Entry-Level Employees → Receive directives from middle management that are digestible but often vague or lacking in substance. Consciously or not, they notice the dip in quality and start using GenAI to churn out content, reports, code, and presentations.

Work then travels back up from ICs to managers, who may or may not notice the slop. When it goes undetected, it gets folded into reports that are sent upstream to executives. Executives, relatively removed from granular work and used to things arriving watered down, may or may not notice the dip in quality either. Meanwhile, ICs and managers fear backlash for calling out poor-quality GenAI directives, and the cycle continues.

Companies and organizations began experimenting with AI with earnest intentions. Language models are truly incredible, and fascinating new tools are released every week. The intent and desire here make sense.

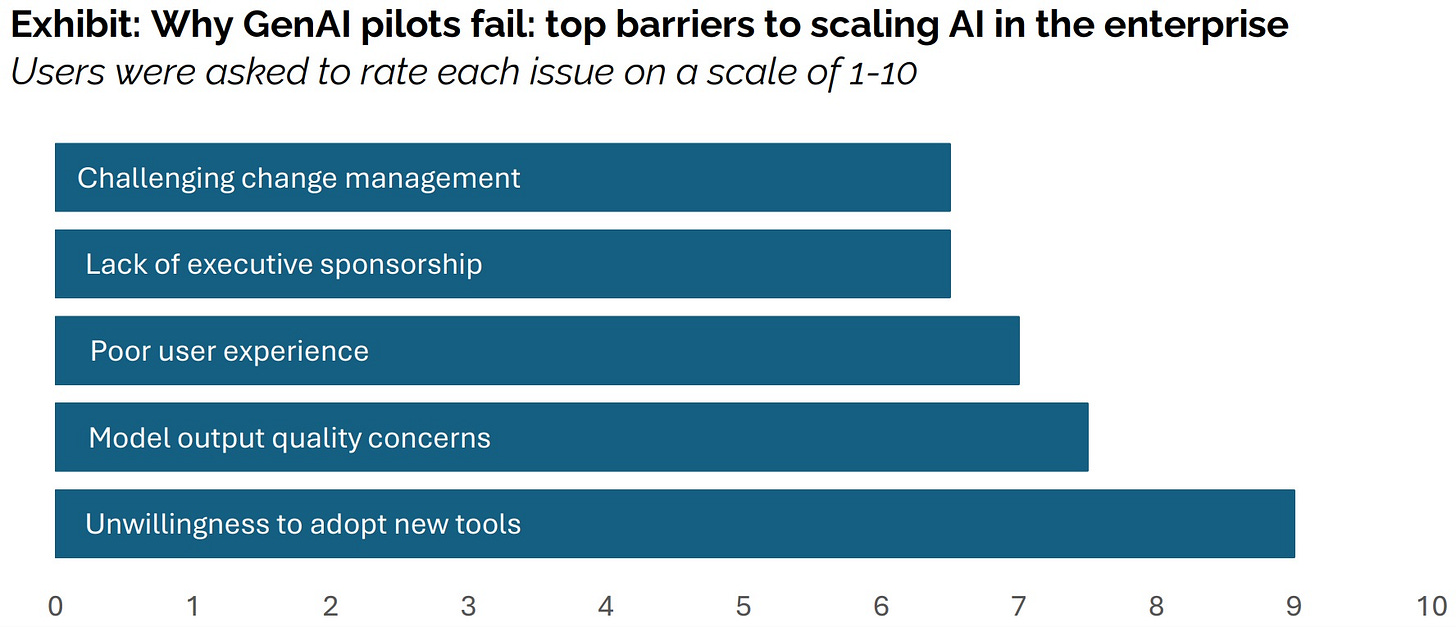

The real gap, then, is org-wide training and a clear understanding of what this technology can and cannot currently do well. Blanket statements like ‘We need to be an AI-first organization’ or ‘Try to do this task with AI’ are clearly a step in the wrong direction. Agentic AI is part of our future, but even the top models still fail at basic office tasks, with reported success rates of only around 10% to 24%. AI everywhere, at least for now, results in costly mistakes that can be difficult to walk back from.

What we need is a no-slop playbook. We need to be able to intelligently employ AI where it is effective, and consistently recognize where it falls short.

The No-Slop Playbook

Navigating the usage of AI in the workplace is difficult, but not impossible.

Here are a few absolute musts for organizations that want to maintain excellence while staying future-focused:

Create a Shared AI Culture Through Training

Organizations need everyone on the same page about how AI is used, where it works, and where it falls short. Extensive, formal training should cover both the benefits (speed, summarization, drafting) and the numerous drawbacks (context gaps, hallucinations, and cognitive offloading). Without a shared foundation, AI usage becomes inconsistent and devolves into workslop being produced and distributed.

Mandate Provenance and Transparency

AI-assisted deliverables should be labeled as such, and doing so is becoming easier. AI-generated content should carry clear AI usage headers or watermarks. This practice normalizes honesty and awareness about when and how AI is being used. Every employee should know what C2PA (the Coalition for Content Provenance and Authenticity) stands for, and should understand why content provenance matters in the age of AI.

Quality as ‘Advancing the Task’

Deliverables should move a project forward by (1) enabling a decision, (2) reducing uncertainty, or (3) producing a measurable delta. Outputs should be actionable rather than decorative. Quality over quantity is key, and slop should be ruthlessly sniffed out and discouraged.

Audited AI Use

AI should be applied with judgment, and this should be actively encouraged at every level of an organization. Teams need to use common sense, informed by the training they’ve received, to decide when AI is helping a project or task and when it is hindering it. At the same time, companies should conduct regular AI audits: reviewing how tools are being used, refining guardrails, and streamlining practices so that teams don’t slip into workslop.

Each of these practices is helpful on its own, but together they become essential for preserving human agency in the age of AI. Losing workplace autonomy is not an inevitable consequence of using AI. It is up to businesses to decide which direction they want to take.

Slop Moving Forward

Overwhelmingly, it is the misuse of GenAI that is giving it a bad rap. Lazy deployments and blanket statements about using AI have led us down a path of thinking that more AI usage = better. It’s important to remember that the modern world was built without GenAI, and that, like any tool, it can be wielded for creation or destruction.

Personally, I strongly believe that agent-based intelligence will reshape the workplace. But the technology is nascent, and we have a long road ahead of us before many jobs are fully automated. With patience and some mindfulness, businesses and people can use GenAI to enhance and improve work, not diminish and slopify it. Truly good work stands the test of time, and shoddy work doesn’t.

Together, we can put an end to workslop, and embrace GenAI for its strengths while recognizing its shortcomings. It just requires a little human brainpower.

Thanks for reading.

- Chris