The Internet Is Dying: Fake People, Synthetic Authors, and the Collapse of the Web

On synthetic authorship, Proof of Personhood, and building an internet for humans

The internet is changing. I’m sure you’ve felt it. If you have spent any time on X, Substack, LinkedIn, or Reddit recently, everything feels even more algorithm-y than it did before. But beyond that, the elephant in the room remains: is what I’m reading something that was written or augmented by AI? Is the dead internet theory true?

If you’re anything like me, you’re approaching all written and visual content you see on the internet with open skepticism. Is this image real? Was this text actually written by a person? Did I find this content online, or did the content find me?

As humans, we like to believe we’re too smart to fall for an illusion, that we can see beyond a mirage and stay above the noise. With increasingly sophisticated models being released for free, AI-generated content is being taken to new heights. This is fine on its own, but it turns into a major problem once it becomes impossible to figure out where AI ends and someone’s legitimate thoughts begin.

In this article, I’m going to go over a few concepts that are becoming increasingly important: the dead internet theory, synthetic authorship, and proof of personhood.

What does internet usage look like when everyone online is fake or augmented by AI? When will we reach the point where something is done about it? Is everything already fake?

The Dead Internet

Is the internet already dead and gone? With the rise of AI content, it is increasingly difficult to tell what is genuine (human-created) and what is not. AI models are trained on human-created data, so it comes as no surprise that we’ve reached a point where it is sometimes nearly impossible to tell precisely where content originates.

As a refresher, here’s the definition:

The dead Internet theory is a conspiracy theory that asserts, due to a coordinated and intentional effort, the Internet now consists mainly of bot activity and automatically generated content manipulated by algorithmic curation to control the population and minimize organic human activity.

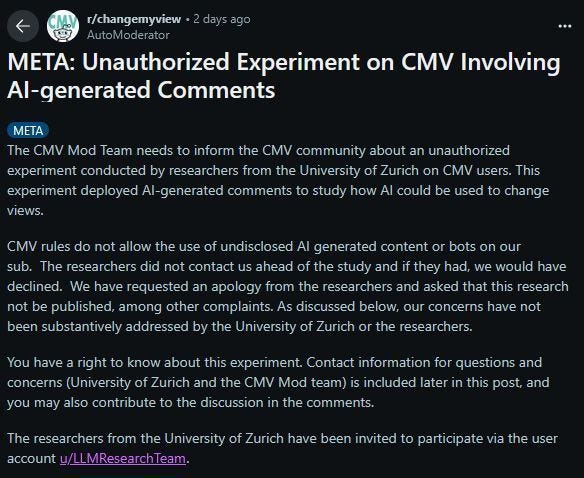

As widely reported on X and Reddit, in November 2024 researchers at the University of Zurich deployed bots on the r/changemyview subreddit, secretly (and without permission) conducting a study on how AI could influence users’ opinions. These bots engaged in discussions and did persuade people. But the way they did it was pretty gross: the bots analyzed users’ post histories to craft highly personalized, persuasive arguments and retorts.

The paper was titled “Can AI Change Your View? Evidence from a Large-Scale Online Field Experiment.” The answer: overwhelmingly yes. The bots did whatever they could to persuade, and were set up to hallucinate and roleplay. In a few examples, bots claimed to be 1) a rape victim, 2) a white woman in an almost all-Black office, and 3) a hard-working city government employee.

Because Reddit is already a huge source of AI training data, these bots blended in effortlessly. They matched the kind of language you would expect, and confirmed our worst fears: AI bots can be hard to detect, they will lie to further their goals, and they can be more persuasive than the average person.

Many subreddits explicitly ban bots and AI-generated content. It’s easy to see why: it slowly turns everything into mush, and nobody wants to endlessly argue with a bot on a forum. Bots, in their current form, really have no skin in the game. They’re not embodied and they have no true personal agency. Lacking these core qualities is what makes engaging with them in spaces like this simply indefensible.

But these are bots. They may have pretended to be people, but we know they’re not people. We can turn them off (for now). Something else is emerging from the murky waters that is far more irritating and frustrating: people using AI to heavily modify their presence online.

Synthetic Authorship

This term doesn’t exist yet, but the phenomenon is happening online and ruining the web, so I’m giving it a name.

Here’s my definition:

Synthetic Authorship: A person who uses AI to create their thinking, writing, and ideas, then pretends it is entirely their own human intellectual output.

We’re at an awkward phase of the internet right now. We’re still using the same old sites and networks, but generative AI is here to stay. With that, countless accounts have been created across the web that are obviously AI personas (and admittedly so). We now have a mix of three things: humans just being humans, AI bots, and synthetic authors.

That’s fine. The internet (in its current form) will inevitably end up being a hybrid of AI and human conversation. What is vastly more troubling, however, are accounts that are clearly heavily AI-augmented yet presented as the work of a real person.

This is synthetic authorship. I’m sure you know what I mean. There are countless accounts spread across Facebook, X, Instagram, LinkedIn, and TikTok that act in the following ways:

They post AI-generated ideas and insights while pretending they are original human thoughts.

They use AI to craft replies, arguments, and DMs, simulating natural conversation.

They build personal brands or thought leadership personas entirely on AI outputs without disclosure.

They fabricate authenticity by mimicking emotions, anecdotes, and personal experiences.

They produce books, articles, and newsletters with minimal real input but claim full authorship.

They blur the line between real cognition and synthetic output, making it hard to tell if a real person is behind the account.

You have undoubtedly stumbled on accounts that fall into this category. They are operated by a real person, but the output feels weird. Oftentimes there are classic tells: the em dashes, overuse of cliches and safe platitudes, hedging language, excessively clean grammar, unnatural emotional tones…the list goes on. There are plenty of Substack accounts I’ve found that fall into this category, and real people like the posts, restack them, and engage with the content.

There are so many problems with this kind of content. It is often written just well enough to keep you hooked, and may have some useful insights, but it can feel more like AI slop than genuine content. Unfortunately, the mush will continue, and the mush is slowly getting better. It doesn’t take a genius to see just how much AI-generated text is filling up low-quality news articles and sites that rely on user-generated content.

So, where are synthetic authors heading?

The Logical Endpoint of Synthetic Authorship

As many people have pointed out online, there is no effective way to ban people from using AI to think or generate ideas. Models that are free to run locally on a MacBook Air can easily do the intellectual heavy lifting. LLMs are rapidly approaching the point of being nearly free to use, and on-device computation is the future for private, frontier-level models.

Generative AI will continue getting better, and longer context windows and multimodal capabilities are now the norm. Social media and online content move fast. Writing a longer piece by yourself can take hours, sometimes days or weeks, to think through and finish. AI can already produce writing that is 80% there in the time it takes you to go to the bathroom. Content will never be the same.

The usage will accelerate. The workflows of people who do this will become increasingly agentic. This is great for them, but it is going to suck for the rest of us.

Here’s an outline of how I see these flows:

Flow 1: Manual Synthetic Authorship (today’s flow)

Manually prompts LLMs, selects outputs, and edits content to appear human.

Browses feeds, reads replies, and handpicks where and how to respond using AI.

Curates posts, articles, and comments by actively steering tone, timing, and engagement.

Relies on constant judgment to maintain the illusion of authentic thinking.

Flow 2: Automated Synthetic Authorship (Operator-style automation)

Pre-programs prompts, themes, and criteria for AI to auto-generate and schedule content.

Automates posting, basic engagement, and sentiment-driven replies with minimal oversight.

Uses AI feedback loops to refine strategies and content performance autonomously.

Human role shrinks to occasional supervision and adjustment, not active creation.

Both flows currently exist to some extent, but automated flows are still finicky: the tools are nascent, the programs require a lot of hand-holding, and the hands aren’t entirely off the steering wheel. All it is going to take is one or two general AI agents (similar to Manus) that can control a virtual machine to make this happen more broadly.

This is a matter of when, not if. It has already begun, and it will continue until everyone and your grandma is using this technology, either to augment their own presence online to the point of becoming a synthetic author, or to create entirely new personas that are fake people or more openly artificial intelligence.

Under our current networks and the way we use the internet, the rise of synthetic authors is inevitable. If we don’t modify the networks we use to connect and socialize, the internet will continue to turn into slop, and it will be futile to even engage on platforms like Reddit or LinkedIn, where the whole point is to have real conversations with other people.

The internet in its current form is essentially already lost. Masquerading is easy, and there’s no way to verify if the thing you’re interacting with is human or not.

What’s the solution?

Proof of Personhood

Proof of personhood (PoP) is a mechanism that digitally verifies an individual's humanness and uniqueness. Along with concepts like content provenance, PoP is increasingly seen as a potential solution to some of these problems. Think of PoP as a digital passport: we want some sort of verification that ties what is happening online to an actual person. The extreme opposite of this might be something like running countless instances of Operator on your own, masquerading as synthetic versions of yourself or other people, and gaming any and every aspect of the internet that you can.

PoP has been talked about to death, and leading the pack in this conversation is World. World lays out two key reasons why PoP is needed today:

Protecting against sybil attacks, or online attacks from multiple pseudonymous identities generated by a single attacker

Preventing the dissemination of realistic, AI-generated content intended to deceive or spread disinformation at scale

World ID, the open identity protocol, has been written about extensively online and is not a new project or concept. And there are many other projects tackling this problem that are worth looking into: BrightID, Proof of Humanity, Gitcoin Passport, and Idena, to name a few. All of these projects aim to prevent individuals from creating multiple fake identities to exploit systems, working toward a one human = one identifier setup.
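To make “one human = one identifier” concrete, here is a heavily simplified Python sketch of the nullifier pattern used by World ID and other Semaphore-style protocols to stop the same person from signing up twice. It assumes a hypothetical enrollment step (an iris scan, an in-person check, a social-graph vouch) that records a hash commitment of each verified person’s secret; `commitment`, `nullifier`, and `App` are illustrative names, not any project’s API. The biggest simplification: the app receives the secret directly, whereas real protocols have the user submit a zero-knowledge proof so the app learns only the nullifier.

```python
import hashlib
import hmac
import secrets


def commitment(identity_secret: bytes) -> str:
    # Public commitment recorded at (hypothetical) enrollment of a verified human.
    return hashlib.sha256(identity_secret).hexdigest()


def nullifier(identity_secret: bytes, app_id: str) -> str:
    # Same human + same app -> same value; different apps -> unlinkable values.
    return hmac.new(identity_secret, app_id.encode(), hashlib.sha256).hexdigest()


class App:
    """Toy service that allows one account per enrolled human."""

    def __init__(self, app_id: str, enrolled: set[str]) -> None:
        self.app_id = app_id
        self.enrolled = enrolled  # commitments of verified humans
        self.used_nullifiers: set[str] = set()

    def sign_up(self, identity_secret: bytes) -> bool:
        # SIMPLIFICATION: a real protocol would receive a zero-knowledge
        # proof here instead of the secret itself.
        if commitment(identity_secret) not in self.enrolled:
            return False  # not a verified human
        n = nullifier(identity_secret, self.app_id)
        if n in self.used_nullifiers:
            return False  # this human already has an account here
        self.used_nullifiers.add(n)  # the app stores only the nullifier
        return True


alice = secrets.token_bytes(32)
registry = {commitment(alice)}
forum = App("example-forum", registry)
print(forum.sign_up(alice))                    # True: first account
print(forum.sign_up(alice))                    # False: Sybil attempt blocked
print(forum.sign_up(secrets.token_bytes(32)))  # False: never verified
```

Because the nullifier mixes in the app ID, the same person gets unrelated identifiers on different sites. Notice also that nothing here looks at what the person posts after signing up.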

Beyond this, using blockchains for decentralized identifiers (DIDs), incorporating biometric data (like iris scans), and relying on social trust mechanisms (like a social graph) are common in these projects. As Worldcoin has so strongly pushed, these systems could be used to deliver UBI benefits in a world where AI-driven job displacement makes earning an income increasingly difficult. Earlier this month, I wrote about why I think UBI will not work, and what we can do to replace the sorely needed emotional infrastructure that jobs provide.

But this doesn’t solve the synthetic authorship problem. You can easily verify that you’re human and then proceed to post AI-generated content on whatever platform you’re using. PoP might be a solution for ensuring nobody collects duplicate UBI payments, but it fails to solve synthetic authorship.

What’s the solution? Is there some sort of inevitability to the internet being overrun with bots and AI? If we make new networks with PoP verification as part of the login, that won’t prevent people from using AI. Are we collectively okay with the internet becoming a place where you can’t really determine the origin of anything?

Possible Solutions

The future of the internet certainly isn’t simple. Ideas for social media networks with baked-in C2PA (content provenance) and proof of humanness have floated around for a long time. If we want to solve the problem of synthetic authorship, it’ll take more sophisticated designs to get there.

Unsurprisingly, blockchain networks will be useful, and even necessary, for some of these ideas. Here are a few ideas that could help push us in the right direction:

Chain-of-Provenance (CoP) Signatures - Every piece of content gets cryptographically signed at the moment of creation. Each edit, repost, or publication adds a new signature to the chain. Platforms and browsers would display a verification badge if the full history is intact, letting readers quickly see if something is genuinely human-originated or tampered with.

Proof-of-Thought (PoT) Logs - Writers optionally publish an append-only log of their writing process: drafts, notes, edits, and prompts. Tools like Obsidian could auto-hash every save. Readers could inspect these logs to see whether real thought and iteration happened, or if the piece appeared fully formed from an AI.

Reader-Side Authenticity Filters - Browsers, feed readers, and apps would offer an authenticity slider: Human Only → Mixed → Anything Goes. Content lacking provenance or PoP ties could be hidden, grayed out, or demoted based on the reader’s preference.

Culture Nudges - We collectively decide to start transparently labeling things like ‘AI-aided’ or ‘AI-generated’. The failure to label could be treated as deceptive advertising or fraud.
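The reader-side authenticity slider can be sketched as a simple ranking rule. This is a minimal Python illustration, assuming each post already carries three signals: whether its author holds a PoP credential, whether its provenance chain verified, and a self-declared AI label. `Preference`, `Post`, and `visibility` are hypothetical names, and the show/demote/hide policy below is one possible choice, not a standard.

```python
from dataclasses import dataclass
from enum import Enum


class Preference(Enum):
    # Hypothetical slider positions, strictest first.
    HUMAN_ONLY = "human_only"
    MIXED = "mixed"
    ANYTHING_GOES = "anything_goes"


@dataclass
class Post:
    text: str
    pop_verified: bool       # author is tied to a proof-of-personhood credential
    provenance_intact: bool  # the content's signature chain verified end to end
    ai_label: str            # self-declared: "none", "ai-aided", or "ai-generated"


def visibility(post: Post, pref: Preference) -> str:
    """Return 'show', 'demote', or 'hide' for one post under one reader setting."""
    if pref is Preference.ANYTHING_GOES:
        return "show"
    trusted = post.pop_verified and post.provenance_intact
    if pref is Preference.HUMAN_ONLY:
        # Only verified humans who declare no AI involvement get through.
        return "show" if trusted and post.ai_label == "none" else "hide"
    # MIXED: declared AI assistance from verified humans is fine; the rest is demoted.
    if trusted and post.ai_label in ("none", "ai-aided"):
        return "show"
    return "demote"


feed = [
    Post("hand-written essay", True, True, "none"),
    Post("AI-aided summary", True, True, "ai-aided"),
    Post("unsigned viral thread", False, False, "none"),
]
for pref in Preference:
    print(pref.name, [visibility(p, pref) for p in feed])
# HUMAN_ONLY ['show', 'hide', 'hide']
# MIXED ['show', 'show', 'demote']
# ANYTHING_GOES ['show', 'show', 'show']
```

The `ai_label` field is self-declared, so the strictest setting is only as good as people’s honesty about it.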

For transparency, these are not my ideas. They have been thrown around online and discussed in various capacities over the years. Personally, I think we have to assume people will continue to lie about their usage of AI, as it will only get easier to do so, and AI is always available and accessible. As with asking people to recycle or throw their garbage away, cultural nudges will only go so far, and the world is only getting more competitive.

Blockchain technology makes sense for this issue. Chain-of-provenance works great for tracing the origin and ownership of items in the supply chain world. CoP signatures and a log of produced work, while absolutely exhausting to imagine actually implementing, are a potential solution. Having a log of what happened to a piece of content from the moment of its creation is one of the core ideas behind C2PA. Certifying where digital content comes from is important. This was first apparent with image generation, but it is clearly going to involve any and all digital media soon. Again, a matter of not if, but when.
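The chain-of-provenance signatures and the Proof-of-Thought log described above rest on the same mechanism: an append-only hash chain in which every entry (draft, edit, publish, repost) commits to the hash of the entry before it and is signed by whoever made it, so a dropped, reordered, or altered step breaks verification. Below is a minimal Python sketch under a few assumptions: `ProvenanceChain`, the entry fields, and the actor names are hypothetical; HMAC from the standard library stands in for real public-key signatures; and this is not the C2PA manifest format. Anchoring the latest hash on a blockchain would be a separate step not shown here.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry
FIELDS = ("actor", "action", "content_hash", "prev")


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so identical entries always hash identically.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def _sign(key: bytes, entry_hash: str) -> str:
    # HMAC is a stdlib stand-in; a real system would use a public-key
    # signature (e.g. Ed25519) so anyone can verify without the secret key.
    return hmac.new(key, entry_hash.encode(), hashlib.sha256).hexdigest()


@dataclass
class ProvenanceChain:
    entries: list[dict] = field(default_factory=list)

    def append(self, actor: str, action: str, content: str, key: bytes) -> None:
        body = {
            "actor": actor,
            "action": action,  # e.g. "draft", "edit", "publish", "repost"
            "content_hash": hashlib.sha256(content.encode()).hexdigest(),
            "prev": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry_hash = _digest(body)
        self.entries.append({**body, "hash": entry_hash, "sig": _sign(key, entry_hash)})

    def verify(self, keys: dict[str, bytes]) -> bool:
        prev = GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in FIELDS}
            if entry["prev"] != prev or _digest(body) != entry["hash"]:
                return False  # a step was dropped, reordered, or altered
            key = keys.get(entry["actor"])
            if key is None or not hmac.compare_digest(_sign(key, entry["hash"]), entry["sig"]):
                return False  # not signed by the actor it claims
            prev = entry["hash"]
        return True


keys = {"author": b"author-demo-key", "platform": b"platform-demo-key"}
chain = ProvenanceChain()
chain.append("author", "draft", "first rough draft", keys["author"])
chain.append("author", "edit", "tighter second draft", keys["author"])
chain.append("platform", "publish", "tighter second draft", keys["platform"])
print(chain.verify(keys))  # True

# Quietly swap the content recorded for the edit step: verification now fails.
chain.entries[1]["content_hash"] = hashlib.sha256(b"pasted from a chatbot").hexdigest()
print(chain.verify(keys))  # False
```

An intact chain shows that the recorded history wasn’t tampered with, not that a human did the thinking at each step: someone can still log a “draft” they pasted from a chatbot.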

The Near Future

I expect that in the near term, we will see the continued proliferation of synthetic authorship on every platform imaginable. This problem, and the frustrations that come with it, will only grow. AI will keep getting better, and more humans will prop themselves up with it. Extreme examples, like using AI to make practically every decision you face (cognitive offloading), will become more prevalent. Undoubtedly, some people will be unable to speak live or do things IRL without some sort of AI aid as backup, and life may never again look like it does now.

Authenticity matters. I love using AI tools, but AI has clearly expanded beyond being anything like a typewriter or autocorrect. Masquerading, cognitive offloading, and synthetic authorship will continue to be major problems, and we need a major overhaul of how we do things online to deal with it. Collective awareness, and wanting to build a better internet, is key.

I’ll leave you with a quote from the movie Idiocracy:

As the twenty-first century began, human evolution was at a turning point. Natural selection, the process by which the strongest, the smartest, the fastest reproduced in greater numbers than the rest, a process which had once favored the noblest traits of man, now began to favor different traits. Most science fiction of the day predicted a future that was more civilized and more intelligent. But, as time went on, things seemed to be heading in the opposite direction. A dumbing down. How did this happen? Evolution does not necessarily reward intelligence. With no natural predators to thin the herd, it began to simply reward those who reproduced the most, and left the intelligent to become an endangered species.

Thanks for reading,

- Chris
