A New Kind of Software

On generative UI, adaptive interfacing, agentic UX, and the future of interaction

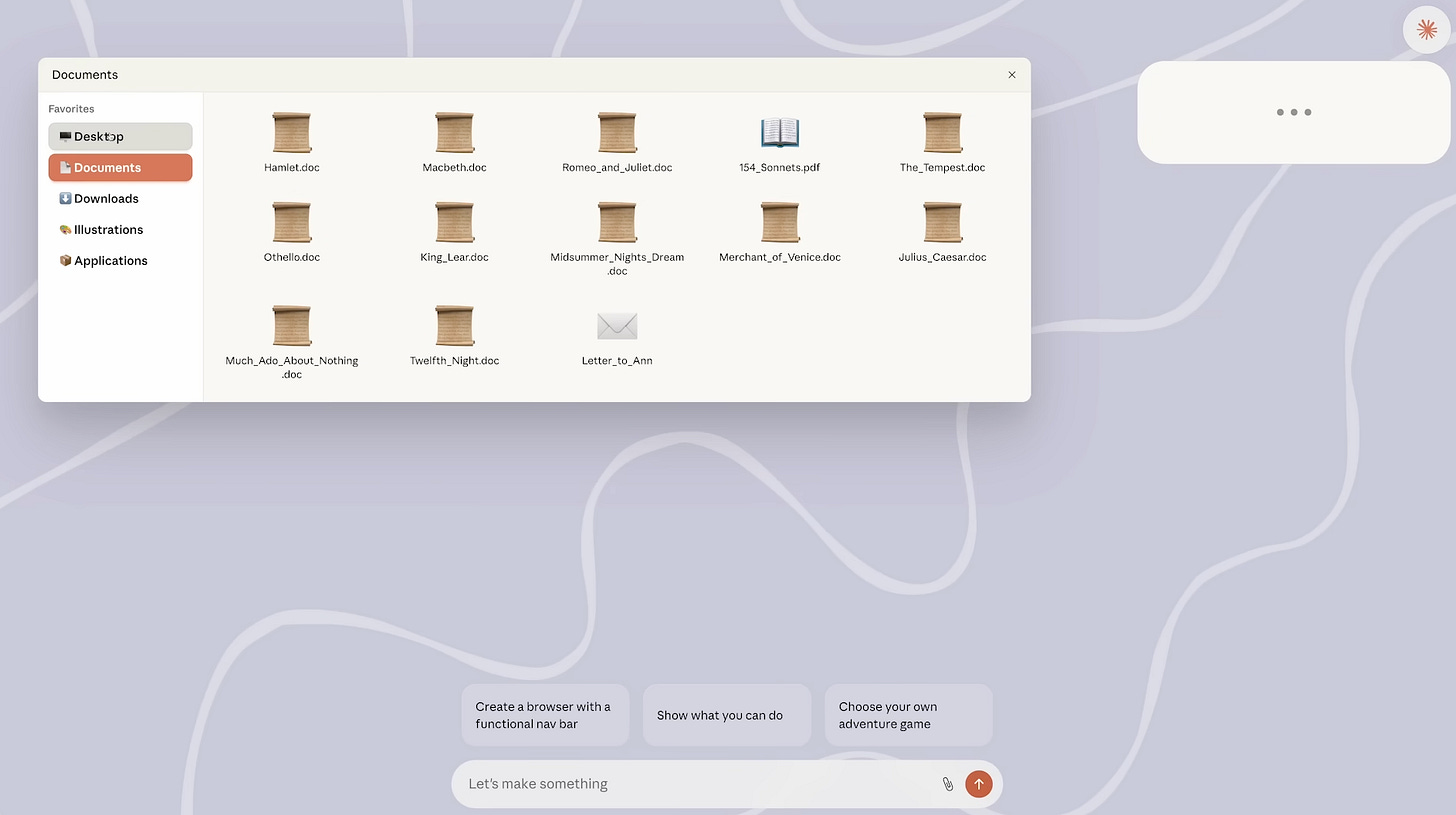

The release of Claude Sonnet 4.5 is certainly exciting; Anthropic bills the new frontier model as the best coding model in the world. But I found the bonus research preview at the end of Anthropic’s announcement most intriguing: ‘Imagine with Claude’.

For years, the fields of generative UI and adaptive interfacing have been very interesting and worthy of discussion, but relatively slow moving. This shifted today.

Generative UI is quickly becoming something to take seriously, and Claude is leading the way. As the video mentions, Claude generates software in real time for the user to interact with, interpreting context and producing new UI elements on the fly. This is software that generates itself in response to what you need. In many ways this is a logical extension of Software 2.0. This is where software is heading.

Let’s dig into what generative UI (GenUI) is, why adaptive interfacing is important, and how it relates to agentic UX and the burgeoning field of relationship-centered design.

Core Ideas and Definitions

Before we dig into examples of GenUI and adaptive interfacing in action, we need clarity on what these things are. Claude’s latest experimental feature is much easier to appreciate with that context in place.

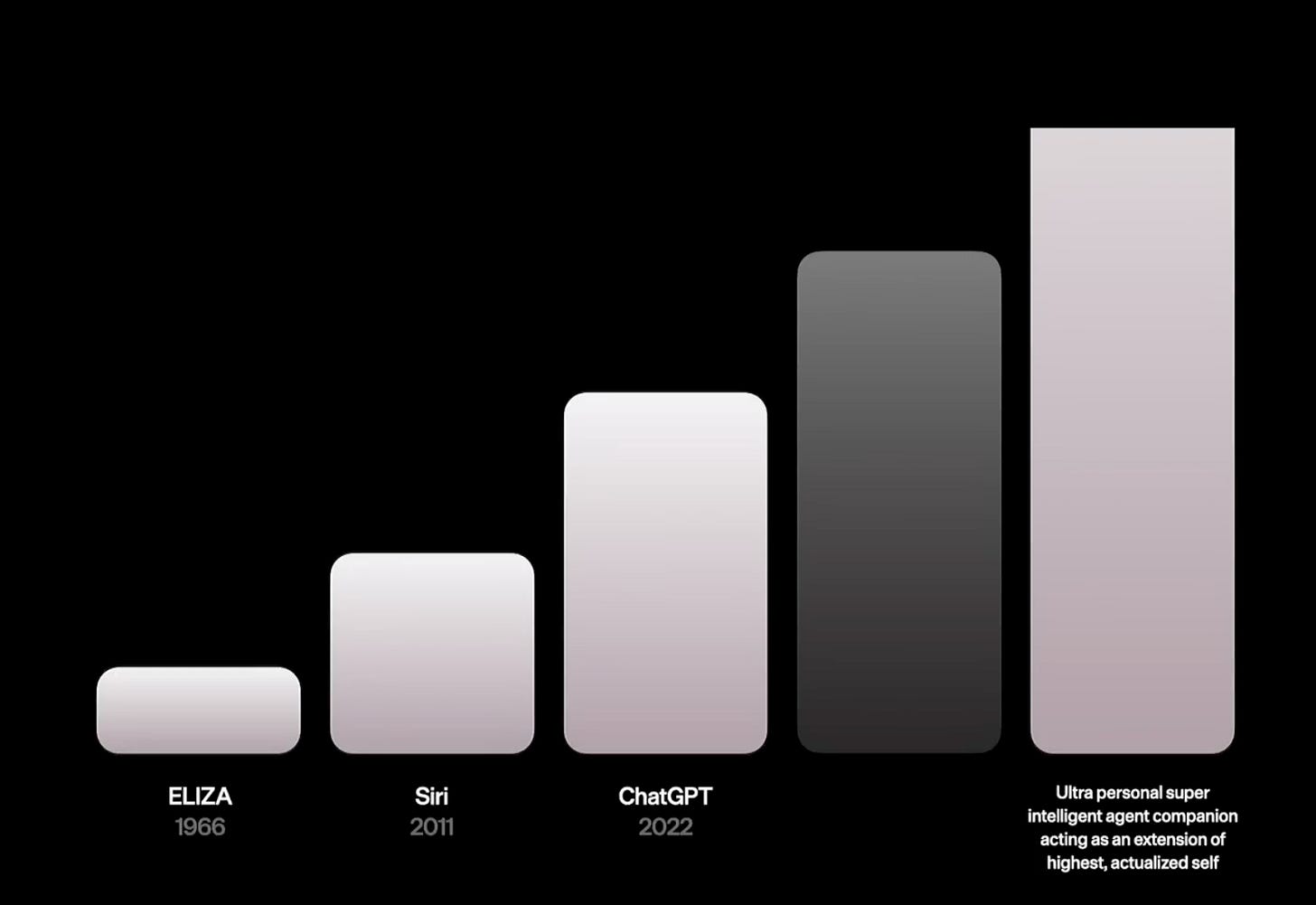

When we think of generative UI, science fiction examples might come to mind, like scenes from the movie Her or Minority Report. In these movies, the interfaces the protagonist interacts with morph and evolve based on what they’re doing. If they’re having a conversation, the interface pulls up relevant materials in real time. If something is unnecessary, it disappears. These kinds of interfaces, at least in film, seem to have a sort of mind meld with the user. Over time, the interface seems to know what is wanted before the desire is even expressed. Actions happen at the speed of thought. We’ve already seen impressive steps in this direction with the eye tracking in Apple’s Vision Pro.

Let’s start by defining generative UI.

Generative UI (GenUI)

Definition: Interfaces dynamically generated in real-time by an AI model, customized to user needs and context.

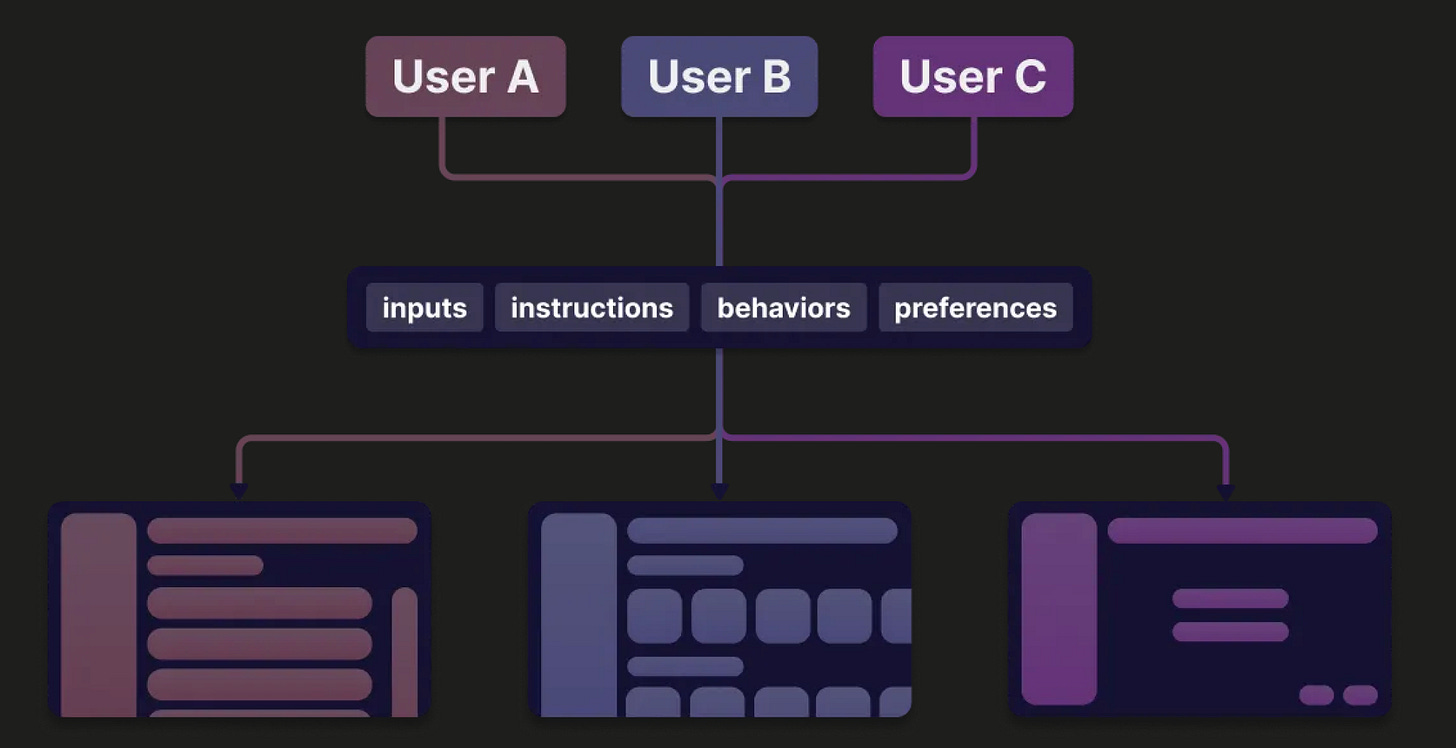

Unlike AI-aided design, where models help designers, the system itself produces the components, layouts, flows, and styling in response to context about the user. It bases the final design on the inputs, instructions, behaviors, and preferences of the user, so each user’s UI looks different.

The term is relatively new, emerging more formally around 2023, though the concept of adaptive interfacing has been around for much longer. You could think of dynamic dashboards, or front-ends built from configurations and API definitions, as early examples. GenUI blurs the line between software as merely a product and software as a living agent. Creating and adopting it will require a new design language to go with it.

This is strongly tied to the concept of adaptive interfacing.

Adaptive Interfacing (AUI)

Definition: Systems that continually adjust based on context (time, location, activity, preference, cognitive load, etc.).

This goes beyond responsive design (adjusting the UI to screen size and resolution) and into responsive cognition. The software adapts to you. Context can include the time of day (a simpler UI in the evening, when cognitive load is higher), your location (are you at work or at home?), past actions, and accessibility needs.
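To make the idea concrete, here is a minimal sketch of context driving UI settings. Everything in it is an assumption for illustration: the `Context` and `UiConfig` fields, the 19:00 cutoff standing in for "evening", and the hand-written rules. A real adaptive system would learn these mappings instead of hard-coding them.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class Context:
    """Signals an adaptive interface might read. All fields are illustrative."""
    local_time: time
    location: str              # e.g. "work" or "home"
    prefers_large_text: bool   # stand-in for an accessibility setting


@dataclass(frozen=True)
class UiConfig:
    density: str          # "full" or "simplified"
    font_scale: float
    show_work_tools: bool


def adapt(ctx: Context) -> UiConfig:
    """Map context to UI settings with simple, hand-written rules."""
    # Assumption: evening and night hours stand in for higher cognitive load.
    evening = ctx.local_time >= time(19, 0) or ctx.local_time < time(5, 0)
    return UiConfig(
        density="simplified" if evening else "full",
        font_scale=1.25 if ctx.prefers_large_text else 1.0,
        show_work_tools=ctx.location == "work",
    )


morning_at_work = adapt(Context(time(9, 30), "work", False))
evening_at_home = adapt(Context(time(21, 0), "home", True))
print(morning_at_work)
print(evening_at_home)
```

The same person gets a dense, work-oriented layout in the morning and a simplified, larger-text layout at night, without changing a single setting by hand.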

For software incorporating AUI, longitudinal adaptation is key. UI tweaks are like behaviors, learned over time based on your needs and who you are as an individual. This kind of UI bridges the gap between a one-size-fits-all UI and personalized software. Your Netflix recommendations are personalized, and now your UI is, too.

Agentic AI was the missing piece to make AUI more feasible.

Agentic UX

Definition: User experiences shaped by AI agents that can plan, reason, and act across a user’s journey.

Traditional UX creates the pathway, leaving the user to do the thinking and coordination through the UI. Agentic UX creates an embedded partner, a built-in AI that is more than an assistant, pulling real weight across tasks.

AI features are shifting from being merely gimmicks to being part of a new UX paradigm. We know that agents can assist with many tasks, from analysis and decision-making to handling workflows and follow-through. The AI is embedded into the experience of the computer interface you’re using, and is actively involved with adapting that interface and experience to better serve your needs.

The field of agentic UX is rapidly evolving, but agents currently lack the deep user grounding and context you would need to rely on them the way you might rely on a person. To close that gap between intelligence and context, agents need to form relationships with the users they serve.

Relationship-Centered Design

Definition: Applying the principles of human relationships, like boundaries, communication, and shared history, to how AI interfaces evolve with users.

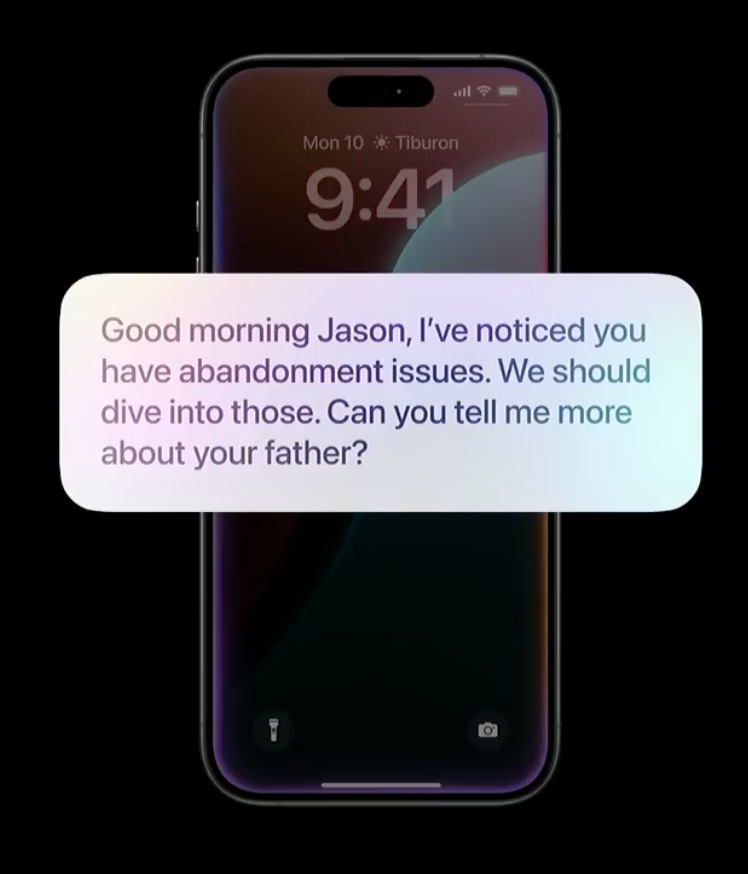

For a richer understanding of relationship-centered design, I highly recommend this Config presentation from 2024. Jason Yuan said the following: ‘this is skeuomorphism for relational design’. Relationship-centered design borrows from how human relationships work to make AI relationships feel intuitive.

When the social boundaries of chatbots and AI systems shift, user behavior shifts. Boundaries + communication + shared history = relationship. The more an AI system knows about you, the better it can work with you. This ties directly into what you see on the screen and how information is presented to you. As trust accumulates, the gap between intelligence and context shrinks.

Together, these concepts create a flywheel of generative UI.

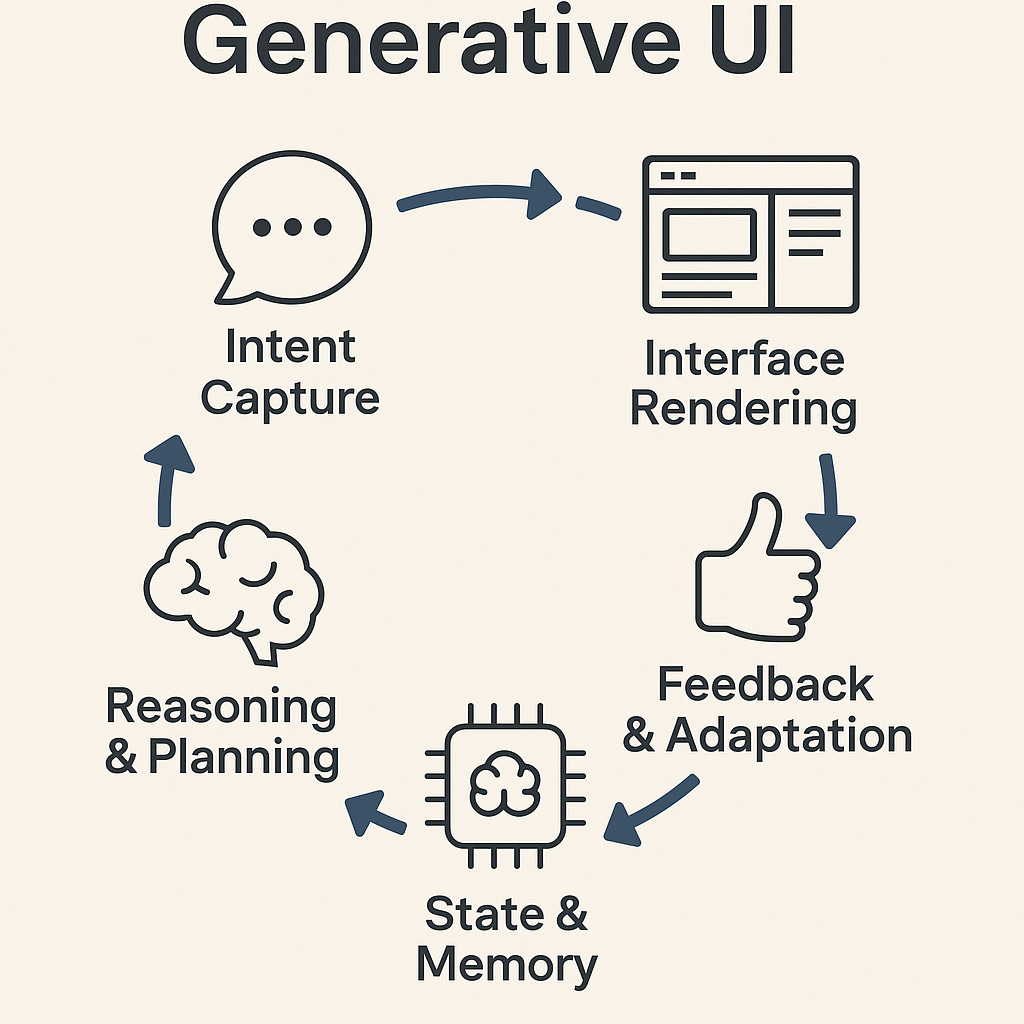

The Flywheel of Generative UI

How a generative UI system arrives at the dynamic interface it presents to the user is evolving daily, but the flywheel looks roughly like this today:

Intent Capture → Gather context: preferences, natural language, past actions, device signals

State & Memory Management → Merge session context with long-term user history

Reasoning & Planning → LLM decides on modules, workflows, or visualizations

Component Selection & Tool Calls → Choose and call appropriate UI elements or external functions

Interface Rendering → Stream components and flows in near real-time

Feedback & Adaptation → User corrections, engagement patterns, and trust signals refine the mapping for next time

More interactions → more adaptation → smoother and faster experiences. Usage builds a shared history, which ideally leads to trust and to better responses and ideas.
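The six stages can be sketched as a single loop. This is a toy under stated assumptions, not how any real product works: a keyword rule stands in for the LLM planner, the `COMPONENTS` registry and every function name are hypothetical, and "memory" is just a count of components the user accepted.

```python
from dataclasses import dataclass, field


@dataclass
class Memory:
    """Long-term user history; here just a count of accepted components."""
    accepted: dict[str, int] = field(default_factory=dict)


# Hypothetical component registry: name -> render function.
COMPONENTS = {
    "chart": lambda data: f"[chart of {data}]",
    "table": lambda data: f"[table of {data}]",
}


def capture_intent(utterance: str) -> dict:
    # 1. Intent capture: natural language only, for brevity.
    return {"text": utterance.lower()}


def merge_state(intent: dict, memory: Memory) -> dict:
    # 2. State and memory: combine session context with long-term history.
    return {**intent, "history": dict(memory.accepted)}


def plan(state: dict) -> str:
    # 3. Reasoning and planning: a keyword rule standing in for an LLM call.
    if "trend" in state["text"]:
        return "chart"
    # Otherwise fall back to what the user has accepted most often.
    history = state["history"]
    return max(history, key=history.get) if history else "table"


def render(component: str, data: str) -> str:
    # 4 and 5. Component selection and rendering via the registry.
    return COMPONENTS[component](data)


def record_feedback(memory: Memory, component: str, accepted: bool) -> None:
    # 6. Feedback and adaptation: remember what the user kept.
    if accepted:
        memory.accepted[component] = memory.accepted.get(component, 0) + 1


def turn(utterance: str, data: str, memory: Memory, accepted: bool = True) -> str:
    component = plan(merge_state(capture_intent(utterance), memory))
    output = render(component, data)
    record_feedback(memory, component, accepted)
    return output


memory = Memory()
first = turn("Show me the sales trend", "sales", memory)
second = turn("Show me signups", "signups", memory)
print(first)
print(second)
```

The second request never mentions a trend, yet it still comes back as a chart, because the user accepted a chart on the previous turn. With an empty `Memory` the same request would fall back to a table. That small difference is the flywheel in miniature.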

A couple of imaginative design and software projects explored these ideas years ago and are worth highlighting. They hinted at where the space was heading, and they have proved prophetic.

Early Examples of GenUI and Adaptive Interfaces

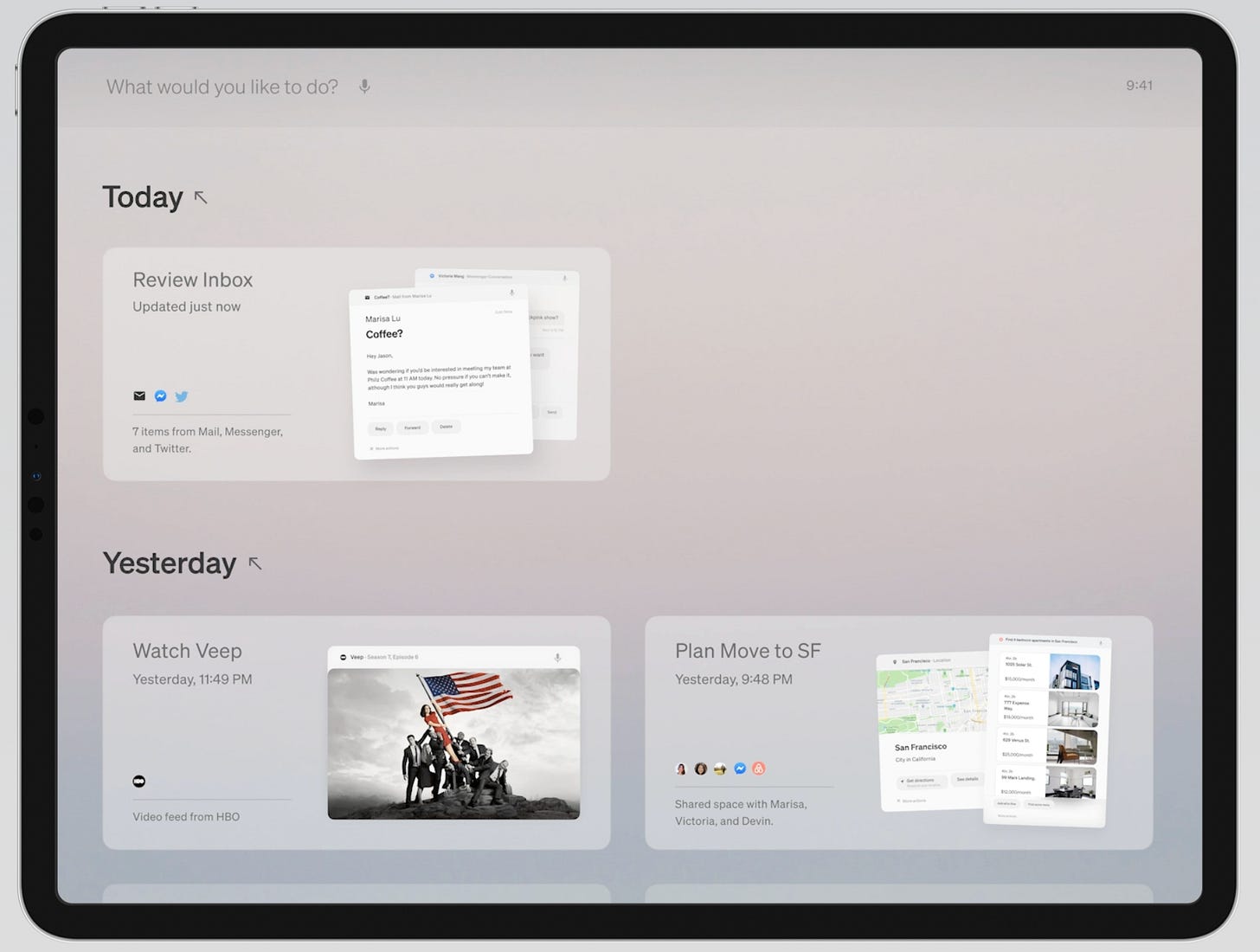

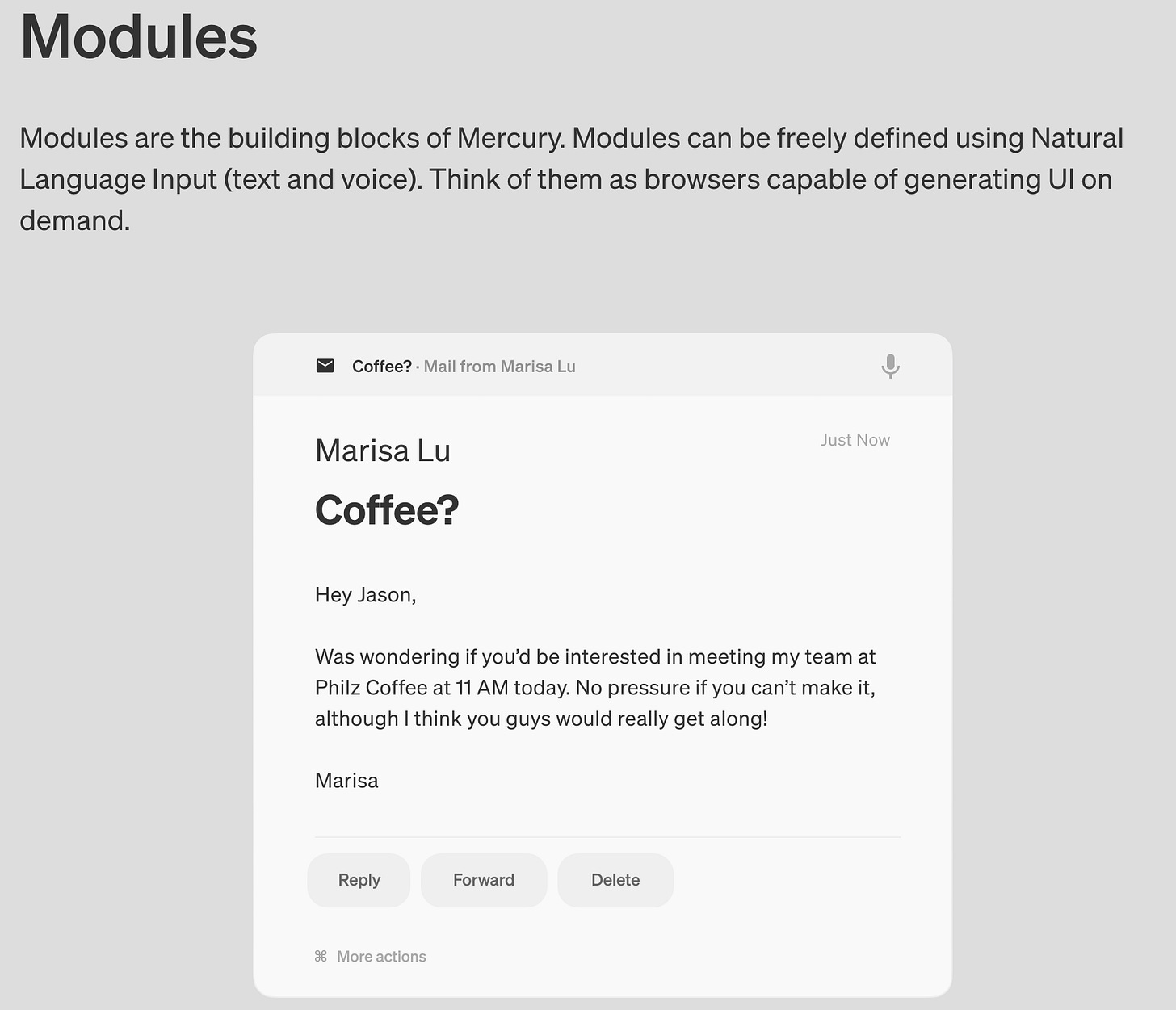

MercuryOS

My favorite example is MercuryOS, created by Jason Yuan in 2019. MercuryOS is a speculative OS that rejects the desktop metaphor and app silos in favor of assembling content and actions fluidly around user intentions. The best way to get a feel for the ideas here is to check out the site.

The architecture is divided up into modules, with chains of modules to extend your focus throughout your session. The motion of the UI guides attention seamlessly, rejecting harsh geometries and prioritizing continuous cognition with minimal friction.

Core Principles

Humane: Inspired by Jef Raskin, Mercury positions itself against the “inhumane” clutter of desktop and mobile OS ecosystems.

Fluid: The system bends around user intent, not the other way around.

Focused: Designed to respect attention spans and reduce overwhelm.

Familiar: Multi-touch and keyboard hybrids, but without the baggage of app metaphors.

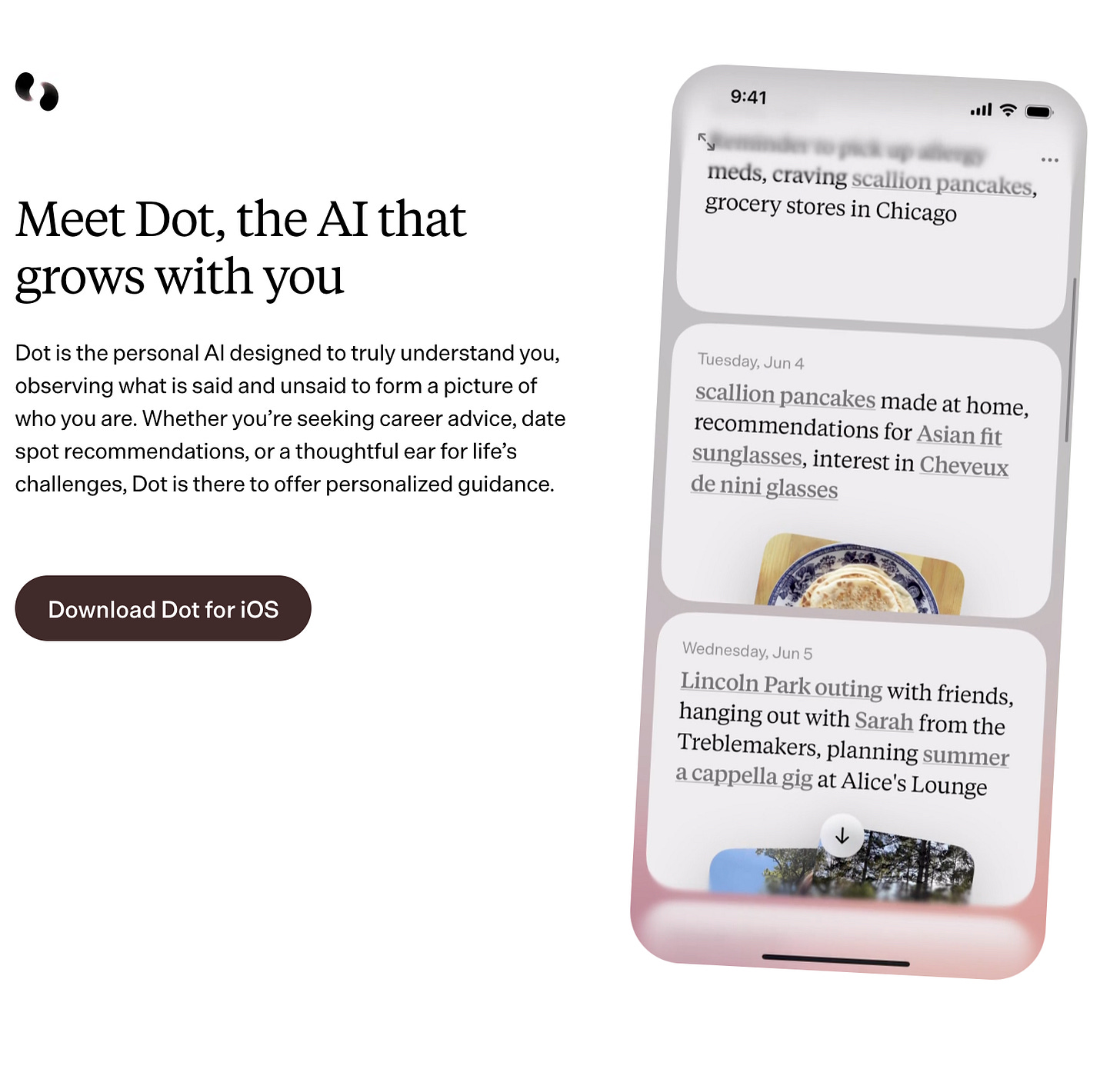

Jason went on to co-found New Computer, the company behind Dot, which built on some of the ideas he explored in this project. Dot was incorporating memories and longer context before many other projects were even considering it. Dot has since been sunset, but it certainly inspired other projects in the personalized AI space.

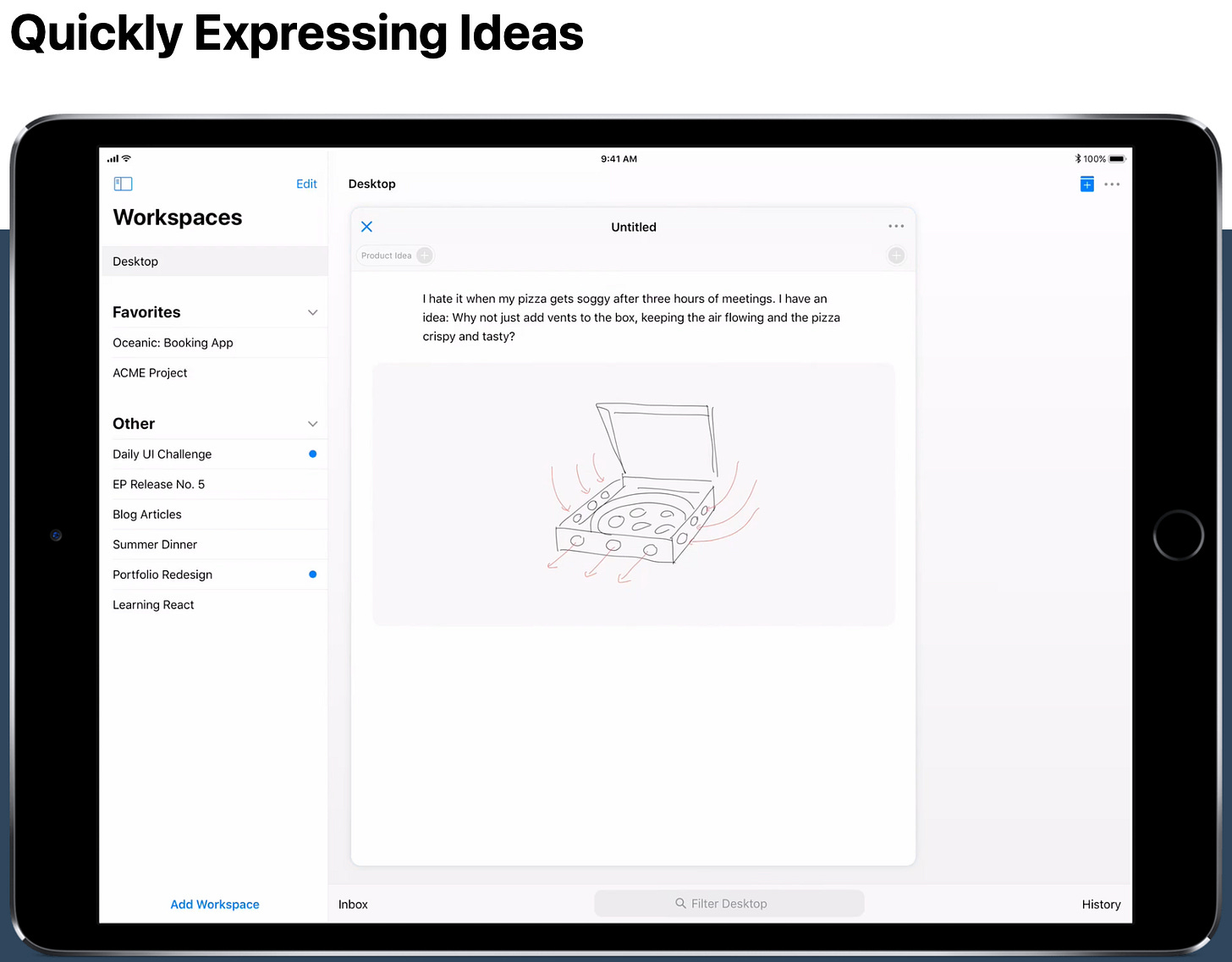

Artifacts

Another intriguing project is Artifacts, a human-centered framework for growing ideas, created by Nikolas Klein, Christoph Labacher, and Florian Ludwig. Instead of treating files as static units of data, Artifacts reframes them as documents of meaning. The system is built to help people continually develop ideas, connect fragments, and collaborate in ways that mirror how humans think and create. Truly human-centered design.

The framework replaces folder hierarchies with contextual documents that let ideas evolve fluidly while remaining navigable. Artifacts is more akin to a thinking environment, built to support long-term creative work.

Core Concepts

Documents as Meaning → Each document represents one idea and can mix text, sketches, images, or models.

Open Organization → Loose associations, tags, and links mirror human associative thinking.

Contextual Memory → Documents retain history and relational ties for retrieval.

Collaboration → Built for co-creation, annotations, and shared idea-building.
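As a rough illustration of how these concepts might translate into a data model, here is a small sketch. It is my own guess at a shape, not the Artifacts schema: the `Document` and `Workspace` classes and all of their fields are hypothetical. The point is that retrieval runs on links and shared tags, with no folder paths anywhere.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    """One idea. Field names are illustrative, not the Artifacts schema."""
    title: str
    content: list[str] = field(default_factory=list)   # text, sketch refs, etc.
    tags: set[str] = field(default_factory=set)
    links: set[str] = field(default_factory=set)       # titles of related docs
    history: list[tuple[str, ...]] = field(default_factory=list)

    def add(self, item: str) -> None:
        # Snapshot the previous state so earlier versions stay retrievable.
        self.history.append(tuple(self.content))
        self.content.append(item)


class Workspace:
    def __init__(self) -> None:
        self.docs: dict[str, Document] = {}

    def put(self, doc: Document) -> None:
        self.docs[doc.title] = doc

    def link(self, a: str, b: str) -> None:
        # Links are stored in both directions.
        self.docs[a].links.add(b)
        self.docs[b].links.add(a)

    def related(self, title: str) -> list[str]:
        """Docs linked to `title` or sharing a tag with it, sorted by title."""
        doc = self.docs[title]
        hits = set(doc.links)
        for other in self.docs.values():
            if other.title != title and doc.tags & other.tags:
                hits.add(other.title)
        return sorted(hits)


ws = Workspace()
ws.put(Document("Logo sketch", tags={"branding"}))
ws.put(Document("Color palette", tags={"branding"}))
ws.put(Document("Launch plan", tags={"marketing"}))
ws.link("Logo sketch", "Launch plan")
ws.docs["Logo sketch"].add("first pencil draft")
print(ws.related("Logo sketch"))
```

Here "Color palette" surfaces through a shared tag and "Launch plan" through an explicit link, which is closer to associative recall than to browsing a directory tree.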

As AI evolves, so does how we imagine UI and how we interact not only with AI, but with computers in general. Like MercuryOS, this project points towards a future that frames digital environments around meaning and collaboration instead of apps and folders.

This leads us to Claude’s latest experiment.

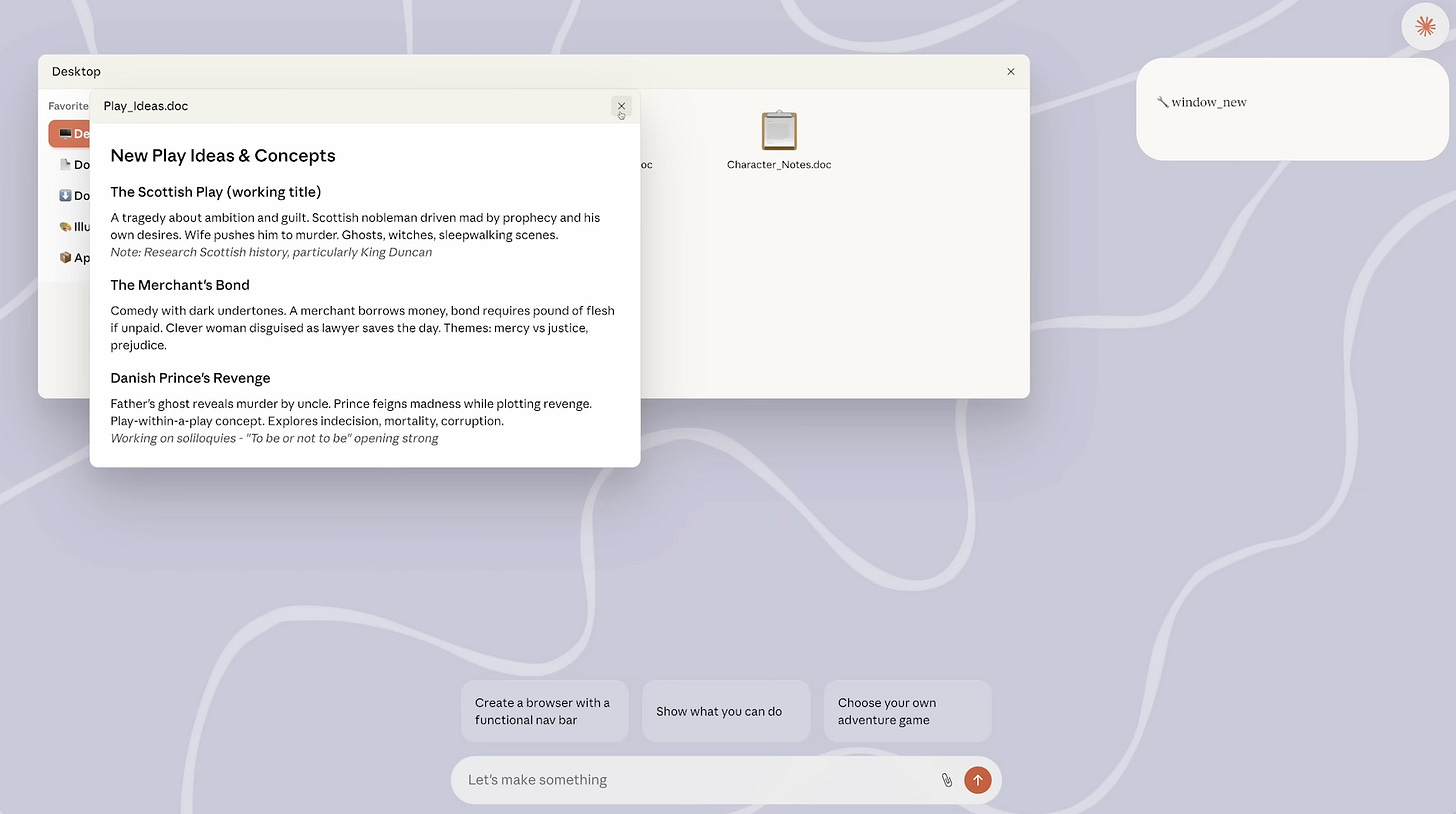

Imagine with Claude

The best way to visualize this new experiment is to simply watch the video. Although this is still an experiment (and may not be ready for prime time for a while), the fact that Anthropic shipped it as a research preview validates the field.

Claude demonstrates direct generation of UI with no middle step: the system interprets intent and context, then renders new interface elements immediately.

The user expresses an intent (click, text, voice)

The system interprets context and renders interface elements

No prewritten functions or screen flows, with UI emerging on demand as you interact

We will soon see both the exciting aspects of this type of UI within Claude, and also its limitations for both users and developers.

The Road Ahead

For users, there’s a lot to be excited about when it comes to adaptive interfaces and generative UI. From seamless personalization to increased accessibility, we will soon be able to do more with less, and faster. For many apps, we will look back and wonder how we even used them before this evolution. It will feel both natural and obvious in hindsight.

For companies, offering deeper personalization and better experiences should improve both retention and conversions. With so much user churn stemming from poor UX, this shift could be groundbreaking.

Lastly, for developers and designers, this could mean less repetitive UI work. More time can be spent on nailing the basics, with modularity and components being at the center of it all. Prototyping will be able to happen faster, and entirely new frameworks will be developed.

This, in conjunction with multimodal UI and relationship-centric computing, has the potential to raise the bar for app quality. GenUI libraries will become increasingly plug and play, evolving in lock-step with no-code development and vibecoding platforms. The result is software that is faster, more intuitive, and more accessible.

That isn’t to say this will come without major challenges. Users require stability, and having a consistent UI is incredibly important. If LLMs fabricate unusable components, what’s the point? Asking users for additional information makes apps inherently less privacy focused, and more emphasis may need to be placed on running optimized small language models to reduce cost. The last thing we want is overly invasive programs that make people uncomfortable and barely improve the user experience.
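One plausible guardrail against fabricated components is to check whatever the model proposes against a known set before rendering it. The sketch below assumes the model returns a JSON component spec. The `ALLOWED` list, the spec shape, and the `FALLBACK` component are all hypothetical and not taken from any real GenUI library.

```python
import json

# Hypothetical allow-list: component type -> props it must include.
ALLOWED = {
    "text": {"content"},
    "button": {"label"},
    "text_input": {"label", "placeholder"},
}

# A safe component to show when the proposed spec cannot be trusted.
FALLBACK = {"type": "text", "props": {"content": "Something went wrong. Please try again."}}


def validate(raw: str) -> dict:
    """Return the parsed spec if it is well-formed, else the fallback."""
    try:
        spec = json.loads(raw)
    except json.JSONDecodeError:
        return FALLBACK
    if not isinstance(spec, dict):
        return FALLBACK
    kind = spec.get("type")
    props = spec.get("props")
    if not isinstance(kind, str) or kind not in ALLOWED:
        return FALLBACK  # unknown or fabricated component type
    if not isinstance(props, dict) or not ALLOWED[kind].issubset(props):
        return FALLBACK  # a required prop is missing
    return spec


good = validate('{"type": "button", "props": {"label": "Save"}}')
invented = validate('{"type": "hologram", "props": {}}')
print(good)
print(invented)
```

A check like this trades some of the "anything can appear" magic for stability, which is exactly the tension users and developers will have to navigate.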

The future of computer interaction is going to be intuitive, fast, and unlike anything we’ve ever experienced. We just need to not mess it up.

Thanks for reading.

- Chris