Everyday Cyborgs: Apple’s Vision Pro, Brain-Computer Interfaces, and Consumer Mind Reading

Revolutionizing Human-Technology Interaction: A Glimpse into the Next Era of Personal Computing

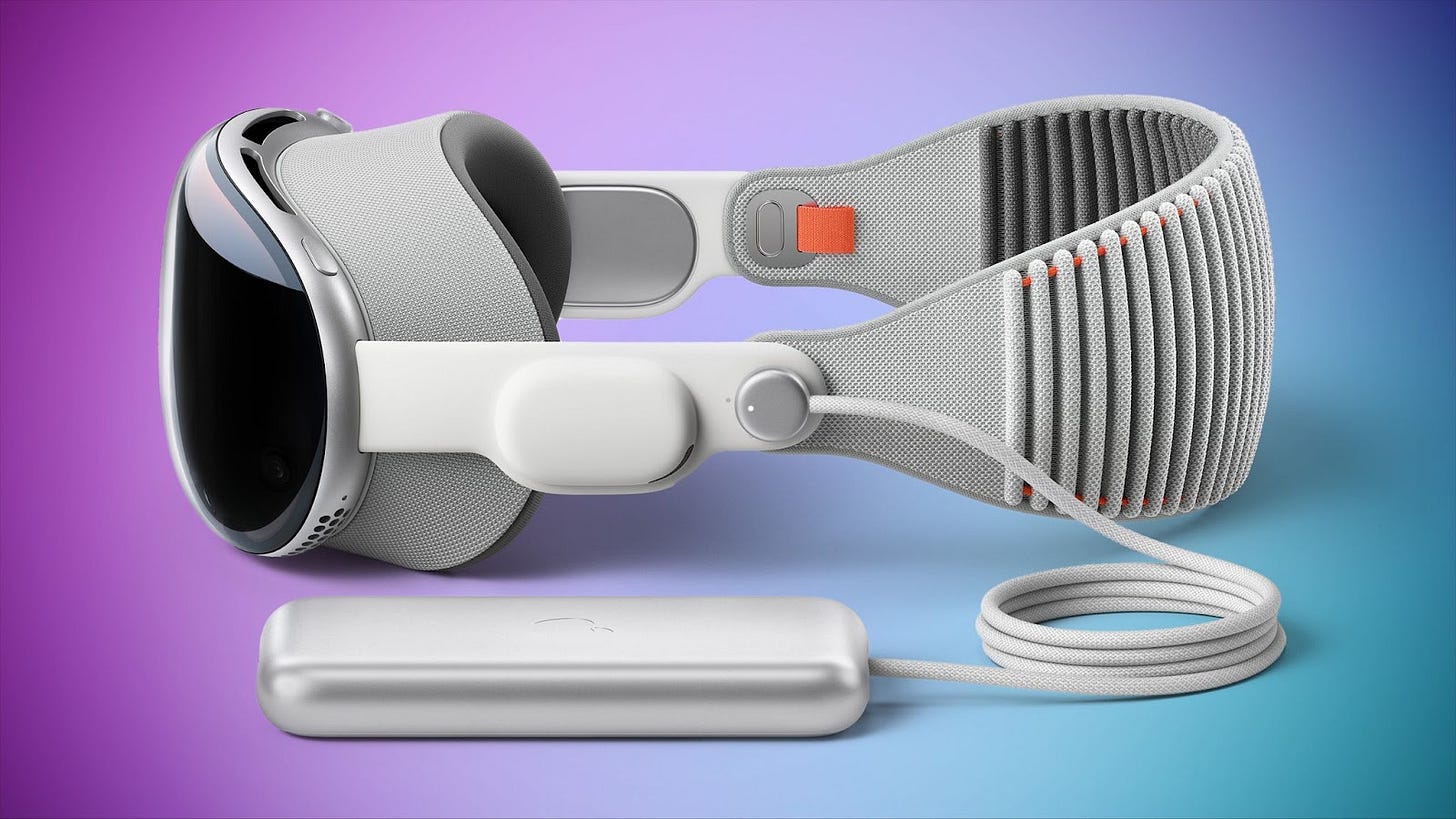

It’s been a couple of weeks since Apple’s announcement of Vision Pro and visionOS, and it’s safe to say that this device is groundbreaking for a multitude of reasons. This article focuses on one aspect of the platform that may have come as a shock to many people watching WWDC: mind reading. Many people who have demoed the new headset have noted that, while it was intuitive and easy to operate, it felt as if the device knew what they wanted to do before they realized they wanted to do it.

Apple has arguably created the first accessible, mass-produced, non-invasive consumer brain-computer interface (BCI). Other companies pioneered the underlying technology, but it appears the dawn of consumer-grade, BCI-influenced spatial computing devices is finally upon us.

So, why is this important? Why is the future of computing intertwined with neurofeedback, AI, and wearable tech? In this article, we’ll touch on how we got here, how Apple’s new headset is changing how we think about computers, and the significant ethical considerations as we move forward.

Context is Everything: Bionics, Cyborgs, and Wearable Computers

The journey towards spatial computing, epitomized by Vision Pro, is built on a rich history of innovation in the realms of bionics, wearable computing, and biomechatronics. Although the idea of augmenting or enhancing the human body with technology can be traced back much further, let's consider the key developments from the 1960s onward.

Understanding the Concepts

Bionics and Biomechatronics: Bionics emerged in the mid-20th century as the field concerned with using artificial systems to replace or enhance body parts. An outgrowth of bionics, biomechatronics integrates mechanical elements, electronics, and parts of biological organisms into a unified system, such as advanced prosthetics that respond to neural signals. An undeniably successful example of a neuroprosthesis is the cochlear implant.

Wearable Computing: Around the same time as bionics, wearable computing began to take shape. This involved developing devices that could be worn on the body, often offering hands-free interaction with technology. Today, the Fitbit and Apple Watch are familiar, relatively unobtrusive consumer examples of this technology.

Cyborgs: Short for cybernetic organism, the term refers to people with both biological and artificial parts. Notable individuals such as Neil Harbisson have integrated technology into their bodies; in Harbisson’s case, an antenna lets him perceive colors as audible vibrations, exemplifying the idea of a cyborg. One could argue that someone with a cochlear implant is also a cyborg! Cybernetics could be one path on humanity’s route to potential immortality as we augment our biological selves.

Here’s a great recent documentary on Human Cyborgs by Moconomy.

While these developments might seem disjointed or simplistic compared to the capabilities of Vision Pro, they are part of the continuous technological evolution that has led us here. Vision Pro is the next step in this journey, incorporating lessons and advances from these diverse fields. By offering immersive AR/VR experiences and responding to natural eye behavior, it takes wearable computing and human-machine interaction to the next level. As arguably the first mainstream (non-medical) device to augment our senses, it embodies the spirit of bionic enhancement and cyborg technology, marking a new era in the symbiosis of humans and machines.

How Vision Pro is Different

Although Vision Pro is arguably the most important extended-reality (XR) device to date, it certainly isn’t the first. Let’s start by comparing Apple's Vision Pro, Google Glass, and Meta's Quest Pro. Each represents an attempt to merge the physical and digital realms, but with different approaches and outcomes. For the sake of simplicity (sorry, HoloLens), we will focus on only these three.

Google Glass

Google Glass, launched in 2013, was a pioneering step towards AR wearable devices. Its key features included:

Overlaying digital information onto the real world

Hands-free smartphone-like functionality - capturing photos, recording videos, displaying messages, navigating maps, and web search

Short battery life and lower display quality, constrained by the technology of its time

Issues related to privacy and societal acceptance, especially when wearing them in public. It was weird then, and it's still weird a decade later

Meta's Quest Pro

As Meta's first mixed-reality headset, the Quest Pro is a major upgrade in the VR/AR field. Its features include:

A combination of virtual reality immersion and augmented reality passthrough with digital overlays

Better comfort for extended usage (although still not great)

Intuitive controllers with self-tracking technology for a full 360-degree range of motion

TruTouch Haptics for enhanced tactile feedback

Positional audio for increased immersion

Built-in cameras offer an improved, full-color version of Passthrough mode, allowing users to see their surroundings

However, the device comes with a high price tag and requires substantial physical space

Apple's Vision Pro

The Vision Pro from Apple is the latest evolution in wearable tech, pushing the boundaries of VR, AR, and spatial computing. Its main features are:

Spatial computing, which aims to blur the boundaries between VR, AR, and the real world, with brighter, higher-pixel-density displays

Groundbreaking eye tracking that predicts your area of focus so accurately that it is essentially anticipating your actions

EyeSight, a feature that creates an illusion of transparency on the headset's outer surface by displaying the wearer's eyes, promoting eye contact and breaking VR's typical social isolation

Advanced integration of physical and digital worlds, offering a deeply immersive experience

Some reported limitations of the outward-facing EyeSight display include potentially dim, low-resolution images and restricted viewing angles

Google Glass set the stage for blending digital information with our visual field, Quest Pro took a substantial leap with its mixed-reality offering, and Vision Pro pushes the envelope even further. But how does Apple’s latest technology actually work?

How Apple’s ‘Mind-Reading’ Works

The neuroscience elements incorporated into Vision Pro herald a seismic shift in tech, transforming our understanding of traditional consumer products and catapulting us closer to a world reminiscent of the scenarios depicted in Black Mirror. The device's predictive capabilities are revolutionary: they go beyond tracking physical movements, and this technology could evolve to predict our emotional states and cognitive responses far more deeply.

This leap in consumer technology paints a future where devices not only interact with us but also anticipate our needs and responses. The experience of using mixed or virtual reality is evolving; AI models within these devices are constantly attempting to predict your cognitive state.

This formidable technology didn't come easily. It took a lot of meticulous work and clever tricks to make specific predictions possible, some of which are detailed in a collection of patents. One impressive outcome of this effort is the device's ability to predict a user's action before it is performed. Your pupil tends to dilate just before you select something, in part because you anticipate what will happen next. The headset reportedly exploits this through biofeedback: it monitors eye behavior and, in real time, modifies the UI to elicit more of this anticipatory pupil response. This essentially creates a rudimentary brain-computer interface through the eyes, a compelling accomplishment that bypasses the need for invasive brain surgery (but then again, what wouldn’t you do for Apple?).
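Apple has not published how its models work, so the following is only a minimal sketch of the general idea of flagging an anticipatory pupil response, assuming a stream of pupil-diameter samples (in millimetres) at a fixed sampling rate. The function name `anticipation_flags`, the window sizes, and the z-score threshold are hypothetical choices for illustration; a simple statistical threshold stands in for the AI models described above, and the real-time UI feedback loop is not modeled.

```python
from statistics import mean, stdev


def anticipation_flags(pupil_mm, baseline_len=30, window_len=5,
                       z_threshold=2.0, min_sigma=1e-3):
    """Return indices where recent pupil diameter rises well above a trailing baseline.

    Hypothetical detector, not Apple's method.
    pupil_mm:     pupil diameter samples in millimetres (assumed input format).
    baseline_len: number of samples in the trailing baseline window.
    window_len:   number of most recent samples averaged for comparison.
    z_threshold:  baseline standard deviations that count as "dilated".
    min_sigma:    floor on the baseline spread so a flat baseline cannot divide by zero.
    """
    flags = []
    for i in range(baseline_len + window_len, len(pupil_mm) + 1):
        # Baseline: the samples immediately preceding the recent window.
        baseline = pupil_mm[i - window_len - baseline_len:i - window_len]
        recent = pupil_mm[i - window_len:i]
        sigma = max(stdev(baseline), min_sigma)
        z = (mean(recent) - mean(baseline)) / sigma
        if z >= z_threshold:
            # Report the index of the newest sample in the recent window.
            flags.append(i - 1)
    return flags
```

Comparing against a trailing baseline keeps the detector relative to each user's own resting pupil size, which varies with lighting and between people. For example, a series that hovers around 3.0 mm for 40 samples and then jumps to 3.4 mm is first flagged at index 40, the first dilated sample.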

These advancements echo the capabilities we're seeing in brain-computer interface (BCI) devices and certain medical technologies. Apple is pushing the boundaries of what's expected from traditional consumer products, transforming them into powerful tools for personal development, insight, and entertainment. While invasive BCI devices like those developed by Neuralink require surgery and are primarily aimed at medical applications, Apple’s headset takes a non-invasive approach, cleverly combining eye tracking and AI.

Why Apple’s Foray into Any New Market Is an Indication of Societal Shift

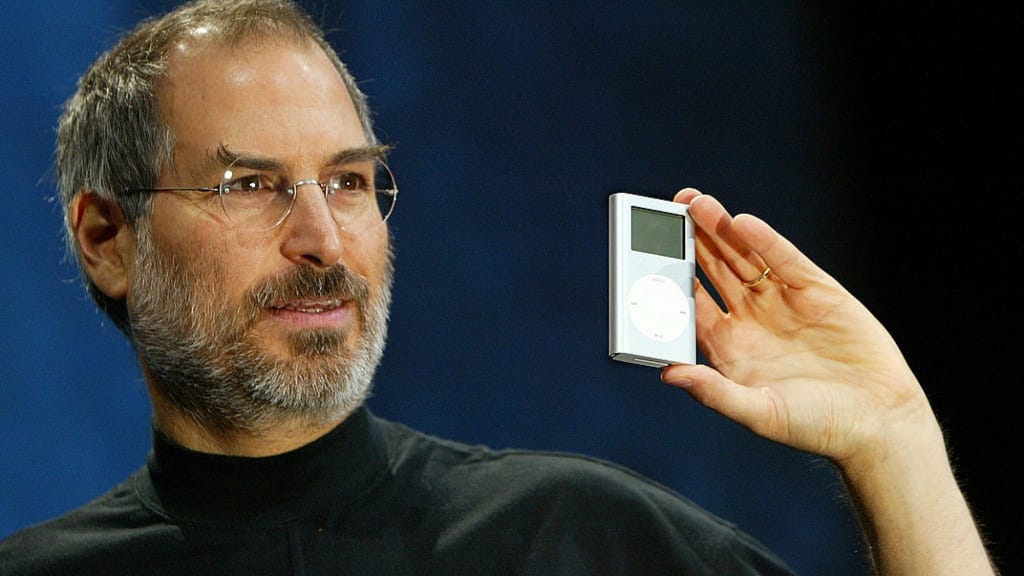

Apple has, time and again, proven itself to be an extraordinary predictor and shaper of technological trends. Steve Jobs was instrumental in this, foreseeing the rise of personal computing in the late 1970s and early 1980s. A look back at Apple's Macintosh line of computers and its sleek, user-friendly laptops serves as a testament to this foresight.

Apple's insight wasn't confined to the realm of conventional personal computing. Its foray into the MP3 player market with the iPod in 2001 was another strategic move. At the time, numerous MP3 players existed, but none could match the user-friendly interface and seamless integration with iTunes that the iPod offered. Apple didn't invent the MP3 player; instead, it reinvented the device and made it better, setting a new standard in the process. I’m sure you’re seeing the pattern here.

Next came the iPhone in 2007, which disrupted the mobile phone market entirely. We all know how this went down: phones with physical keyboards, like the BlackBerry, became dinosaurs within a few years. Once again, Apple didn't create the smartphone; it reinvented it.

Apple succeeds by:

Creating for themselves: Apple's engineers design products they personally would want to use. This ensures that every creation serves a genuine need and offers value to the user.

Ease of use: Jobs was a firm believer in intuitive design. No matter how advanced or feature-packed an Apple product, it must be easy to understand and use.

Simplicity: Apple keeps its product lineups small; the original iPhone, for example, came in a single model. This minimizes decision-making for the consumer, making the purchase process less overwhelming.

Innovation: Apple enters a market only if it can make a product better. It is less interested in creating new categories than in reinventing and improving existing ones.

Apple's entry into spatial computing signals not only a belief in the market but also a conviction that it can improve it. While spatial computing is still developing, Apple's focus on user interface design could be exactly what the VR/AR/XR space needs. Combined with advances in display design, materials science, chipset architecture, and batteries, Vision Pro may soon transform the tech landscape, consolidating the functions of multiple devices into one revolutionary headset.

Converging Frontiers: BCI, XR, and AI

Music for this section: The Terminator Soundtrack - Main Theme

The converging futures of brain-computer interfaces (BCIs), extended reality (XR), and artificial intelligence (AI) could revolutionize our interaction with technology and deepen our understanding of the human brain. Beyond Apple, companies like Neuralink, OpenBCI, Varjo, and Snap are leading the way, making strides toward a future where thoughts become machine-readable instructions and virtual experiences respond to our cognitive states. Our cyborg transhumanist future seems inevitable.

A notable milestone comes from researchers at the University of Texas at Austin, whose AI system decodes brain activity into text. By pairing a transformer language model (an earlier GPT model from OpenAI, a predecessor of the one driving ChatGPT) with non-invasive fMRI scans, the researchers were able to translate brain activity recorded while participants listened to or imagined stories into written language. Although it is not a word-for-word translator of thoughts, it captures the general meaning and could revolutionize communication, particularly for people with speech impairments. The researchers suggest that future versions could use portable brain-imaging systems, making the approach more practical outside the lab.
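As reported, the Texas decoder does not read words directly from the scans: a language model proposes candidate word sequences, an encoding model predicts the brain response each candidate should evoke, and the candidates whose predictions best match the recorded fMRI data are kept. The toy sketch below shows only that scoring step, under heavy assumptions: the word features, the linear encoding weights, the four simulated voxels, and every function name are hypothetical, and a fixed candidate list stands in for the language model's proposals and the beam search.

```python
from math import sqrt

# Toy "semantic features" per word (hypothetical values, not from any real model).
WORD_FEATURES = {
    "dog": (1.0, 0.0, 0.2),
    "cat": (0.9, 0.1, 0.2),
    "ran": (0.0, 1.0, 0.1),
    "slept": (0.1, 0.0, 1.0),
    "home": (0.3, 0.5, 0.0),
}

# Toy linear encoding model: one row per feature, one column per simulated voxel.
ENCODING_WEIGHTS = (
    (1.0, 0.0, 0.5, 0.0),
    (0.0, 1.0, 0.0, 0.5),
    (0.5, 0.0, 0.0, 1.0),
)


def predict_response(words):
    """Predict a voxel response as the average of each word's linear response."""
    n_voxels = len(ENCODING_WEIGHTS[0])
    response = [0.0] * n_voxels
    for word in words:
        feats = WORD_FEATURES[word]
        for v in range(n_voxels):
            response[v] += sum(f * row[v] for f, row in zip(feats, ENCODING_WEIGHTS))
    return [r / len(words) for r in response]


def pearson(a, b):
    """Pearson correlation between two equal-length sequences (0.0 if either is flat)."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    norm = sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    return cov / norm if norm else 0.0


def best_candidate(candidates, recorded):
    """Pick the candidate whose predicted response correlates best with the recording."""
    return max(candidates, key=lambda words: pearson(predict_response(words), recorded))
```

In a toy run where the "recorded" response is the prediction for `["dog", "ran", "home"]` plus a small fixed perturbation, that candidate scores highest against alternatives such as `["cat", "slept", "home"]`.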

Meanwhile, BCI and XR technology are advancing together. Varjo, a Finnish XR headset maker, recently partnered with OpenBCI to incorporate OpenBCI's neural interface, Galea, into Varjo’s Aero VR headset. Galea's suite of sensors, including EEG, EMG, EDA, and PPG, collects data from the user's brain and body, offering a real-time glimpse into human reactions to virtual stimuli. This could let developers create more dynamic and personalized content, transforming user experiences in sectors like gaming, healthcare, research, and interactive media.

Snap, the company behind Snapchat, has also entered the BCI realm by acquiring NextMind, a neurotech startup whose compact BCI can be integrated into the strap of an XR headset. The acquisition is part of Snap's strategy to enhance its AR capabilities, including its Spectacles AR glasses.

Neuralink, co-founded by Elon Musk, has developed the Link system, an implanted device that decodes neural signals and transmits them to computers. The potential is vast: patients could wirelessly control external devices such as keyboards and mice, gaining a new medium of communication. In May 2023, Neuralink received FDA approval for its first human clinical trial, a significant step despite ongoing concerns about device safety and long-term stability.

Muse, an EEG-based meditation headband, is also worth mentioning.

These developments set the stage for tech giants like Apple to deliver consumer products that combine BCI and XR technology. Imagine a future iteration of Vision Pro where users interact with their device using thoughts or emotional responses. EEG technology could track neural responses in real time, providing feedback on mental states and potentially assisting with stress management, meditation, mental health monitoring, and more.
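EEG meditation headbands commonly turn raw signals into band-power measures; relative alpha power (roughly 8 to 12 Hz), which tends to rise during relaxed wakefulness, is one common heuristic. The sketch below computes it with a naive discrete Fourier transform so that it needs only the standard library. It is not Muse's or Apple's algorithm: the function names and band edges are illustrative assumptions, and production code would typically use an FFT with windowing, for example Welch's method via `scipy.signal.welch`.

```python
import math


def band_power(signal, fs, lo_hz, hi_hz):
    """Sum of squared DFT magnitudes for frequency bins within [lo_hz, hi_hz].

    Naive O(n * bins) DFT, fine for short illustrative windows.
    """
    n = len(signal)
    total = 0.0
    for k in range(1, n // 2):  # skip the DC bin (k = 0) and stop before Nyquist
        freq = k * fs / n
        if lo_hz <= freq <= hi_hz:
            re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(signal))
            im = -sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(signal))
            total += re * re + im * im
    return total


def relative_alpha(signal, fs, alpha=(8.0, 12.0), broadband=(1.0, 40.0)):
    """Fraction of broadband (1-40 Hz) power that falls in the alpha band (8-12 Hz)."""
    total = band_power(signal, fs, *broadband)
    return band_power(signal, fs, *alpha) / total if total > 0 else 0.0
```

For a synthetic one-second signal sampled at 256 Hz that mixes a 10 Hz sine with a half-amplitude 20 Hz sine, `relative_alpha` returns about 0.8, since power scales with amplitude squared (1 versus 0.25). A feedback app could track this ratio over successive windows rather than reading any single value.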

As we push the boundaries of technology, ethical, privacy, and data security questions must be addressed, so let’s think about the implications briefly.

Ethical Considerations and Challenges

Vision Pro raises significant ethical challenges. As we move into the future, we face complex questions about individual privacy, societal impact, and potential disruption of our current reality.

The potential future integration of BCI into Vision Pro brings concerns about personal privacy and autonomy to the forefront. If companies gain access to cognitive data through users' brainwaves, it is imperative that we understand and control where and how that data is used. This new level of personal data acquisition raises the essential question of user autonomy and consent in our increasingly interconnected world.

Societal concerns loom large as users become immersed in spatial computing. As depicted in Ready Player One, overreliance on spatial computing technologies could result in decreased physical social interactions and a skewed perception of reality. There's a real risk of creating a disconcertingly disconnected society if people spend the majority of their time in augmented or virtual reality.

Takeaways:

Personal Privacy: The integration of BCI technology could lead to an unprecedented level of personal data collection, raising questions about who owns this data and how it can be used

Autonomy and Consent: There are concerns about the extent to which companies can influence user decisions based on insights derived from their cognitive states

Societal Impact: Overreliance on spatial computing technologies could lead to decreased physical social interactions and a potentially disconnected society

Privacy Measures: Apple needs to maintain its commitment to privacy by developing stringent data handling and user consent policies for Vision Pro

Balanced Use of Technology: Companies should encourage responsible and balanced usage of their technologies, designing applications that enhance reality rather than replace it

Moving Forward

The unveiling of Vision Pro marks a significant milestone in the evolution of wearable computers and bionics. We're witnessing the convergence of these technologies into a form that’s user-friendly, intuitive, and appealing, but also quite daunting.

The path forward suggests a future where BCI mechanisms, such as eye tracking and direct brainwave reading, become integral to our devices. This shift could fundamentally redefine our interactions, rendering keyboards and mice antiquated. As the technology progresses, more direct neural connections, akin to Neuralink's vision, might become the norm.

Such advancements, while promising, bring along ethical considerations around data privacy, societal impacts, and cognitive health. If navigated wisely, we can avoid a dystopian outcome and instead harness this technology for societal benefit. The steady adoption of this technology gives us the opportunity to shape our future responsibly. Vision Pro symbolizes this impending shift, a reminder of our responsibility as we usher in a new era in computing.

So, what’s your take? Will society embrace more invasive spatial technology sooner rather than later? Are we destined for the utopia of our dreams, or the dystopia of our nightmares? Let me know in the comments below.

Learn More:

https://openbci.com/

https://healthitanalytics.com/news/ai-brain-decoder-system-translates-human-brain-activity

https://aclanthology.org/2021.acl-long.348/

https://www.theguardian.com/technology/2023/may/01/ai-makes-non-invasive-mind-reading-possible-by-turning-thoughts-into-text

https://studyfinds.org/chatgpt-brain-activity-decoder/

https://www.businesstelegraph.co.uk/apples-3499-vision-pro-headset-could-read-your-mind/

https://www.roadtovr.com/snap-bci-next-mind-acquisition/

https://www.roadtovr.com/openbci-brain-computer-interface-ar-vr-galea/

https://github.com/Snapchat/NextMind