The Asynchronous Singularity

Uneven distribution, the reality of the human brain, and the counter to the gentle singularity

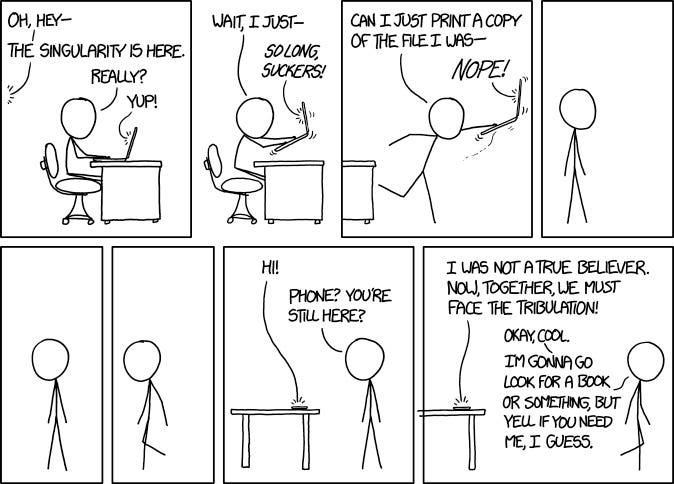

The singularity won’t come in a single moment. It will arrive in fragments, unevenly adopted, and met with resistance. This is the asynchronous singularity.

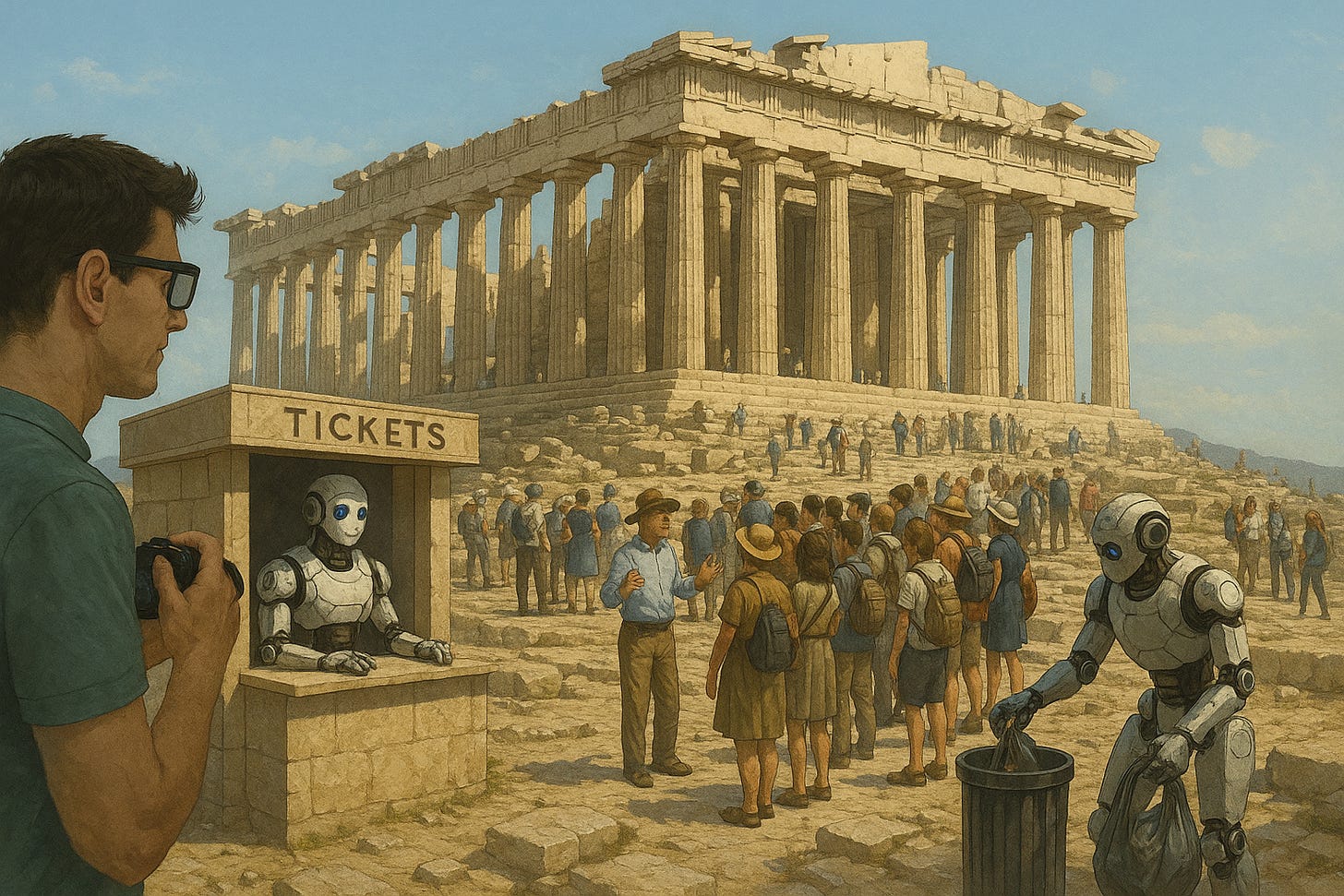

Last week I was on a tour of the Acropolis in Athens, and afterwards I had the chance to grab lunch with a few other tourists I’d met. Naturally, the topic of work came up, and we talked for a bit about our lives, our goals, and what was on the horizon. And, unsurprisingly, AI came up over the course of the conversation.

I asked the group which they would prefer as their guide, if given the choice: a competent humanoid robot or a human. Unanimously, the four Australians (all middle-aged and a few beers in) balked at the question.

“I might pay for a robot guide if it was much cheaper,” said one Australian.

“That’s ridiculous,” said another. “What does a robot know about history?”

And finally, a third said, “I just don’t know if I would care as much, or listen.”

They were older, but their takes felt universal. A humanoid robot tour guide could be a decent, cheaper substitute, but it would feel akin to listening to an audio guide while walking around: more passive, less engaging, and, most importantly, with no skin in the game.

Skin in the game matters for embodiment, and for being taken seriously. That goes for humans, non-human entities, whatever comes next. This is one of many arguments for agentic AI having access to cryptocurrency; money and the work it takes to get it are an important part of the society and culture we participate in.

But money alone isn’t enough. Building a robot that can buy a burrito at the 7-Eleven down the street doesn’t mean it will suddenly be respected. Humanness, the human condition, and the time it takes to become a person are among the many layers that cultivate social respect. You can build robots that do the jobs of people (and even look like them), but the gap that remains, and the uncanny valley that will emerge from it, mean that even humanoids that do a job perfectly will never be treated the same as a real human being.

This respect, or lack thereof, will be a problem for increasingly agentic systems. Businesses might be chomping at the bit to deploy agentic software to solve their problems, but when things go wrong, humans, not the AI, will be held responsible.

This leads us to the central problem: we are building a world where machines will ‘know more’, but embodiment, presence, and culture are massive factors for the deployment and embrace of these systems. The growth of silicon intelligence is rapidly outpacing the speed of the physical reality humans call home, and this is going to lead to what I’m calling the asynchronous singularity.

In this piece, I will outline the asynchronous singularity and how it contrasts with Sam Altman’s “gentle singularity,” explain how and why it will unfold over a longer period of time, and describe the huge barriers that need to be surmounted to speed up the collective pace of the human race.

Defining the Async Singularity

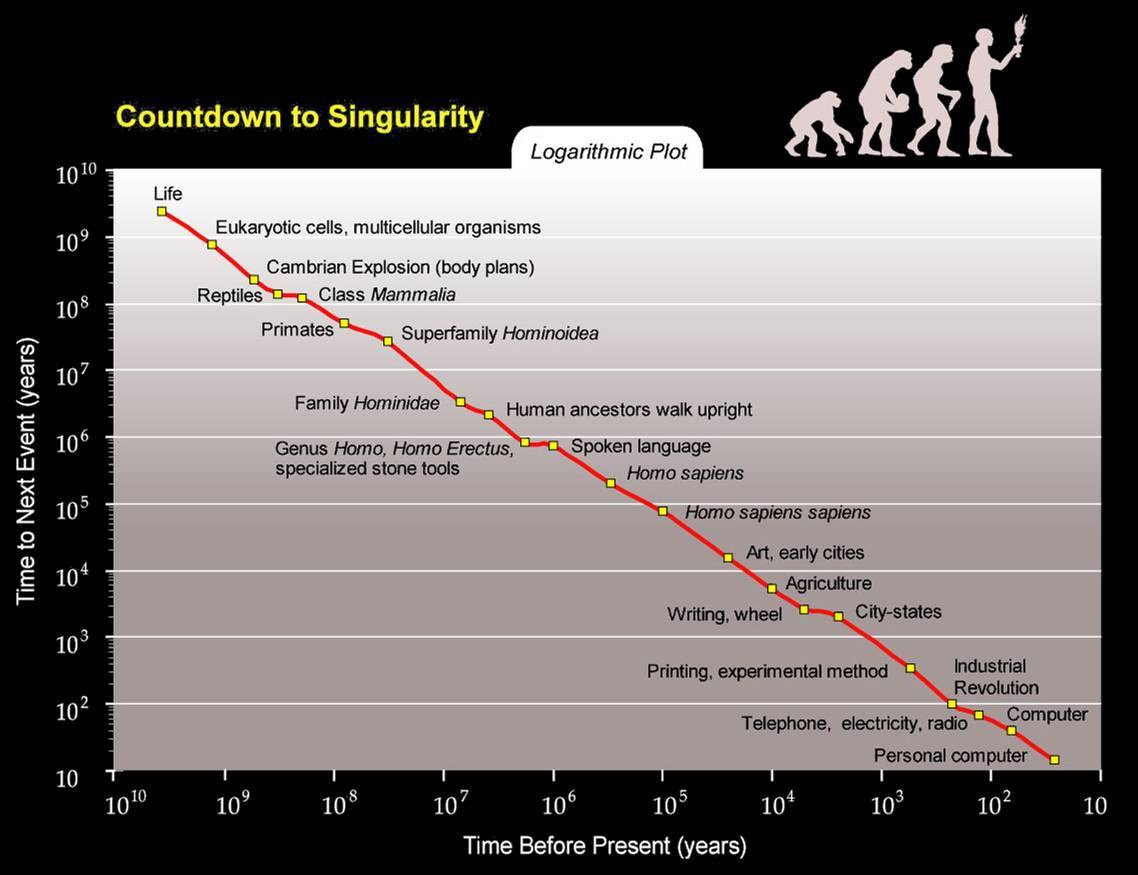

The technological singularity is a hypothetical future point where technological growth, particularly in artificial intelligence, becomes uncontrollable and irreversible, leading to unforeseeable and profound changes to human civilization.

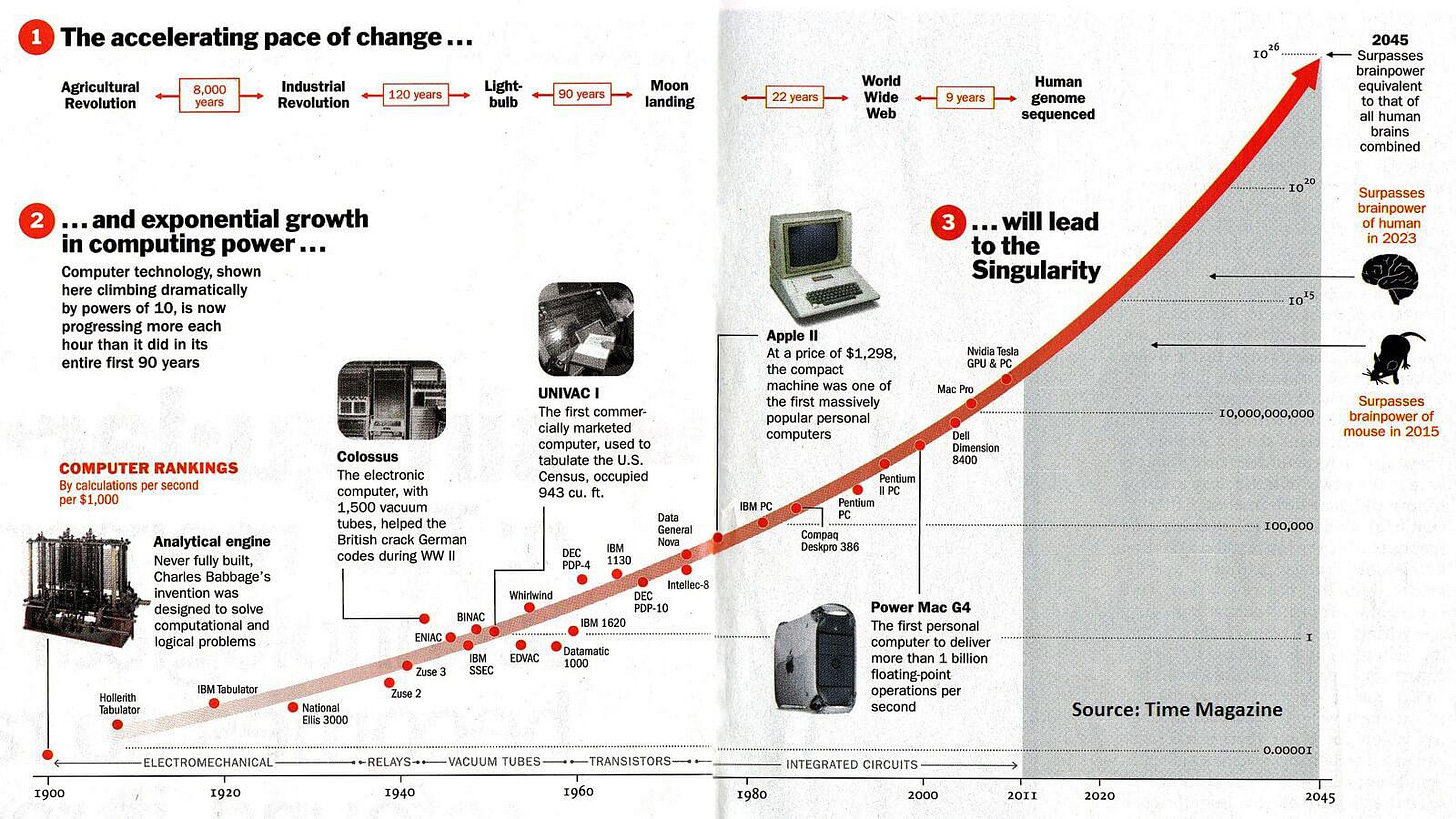

According to the Silicon Valley hivemind (and, for years, Ray Kurzweil), the singularity is not a matter of if, but when. We’ve heard the story many times: an upgradable agent enters a recursive self-improvement loop, causing an intelligence explosion beyond human comprehension. Scenarios for this have been laid out extensively and are as compelling as they are plausible. The recursive AGI breakthrough (leading to the singularity) could happen within the next decade. What happens afterwards is largely a question mark.

When I’ve imagined ‘the singularity’ in the past, it has often involved the following scenario: the breakthrough happens, intelligence proliferates, and human life is transformed in a matter of a few short years. Nuclear fusion abounds, a century’s worth of materials science breakthroughs happen in the span of a couple of weeks, and AI (a cloud or agentic swarm) is somehow able to create or manage the resources necessary to deploy or manufacture whatever it needs or wants, fast.

The scenario quickly gets out of control. Humans are no longer at the steering wheel – we don’t even know where the steering wheel is anymore. Through what feels like magic, we’ve created something that has seemingly endless, boundless potential, but we cannot control it. Perhaps a cleverly bioengineered pandemic is distributed quickly across the planet, killing off a majority of people living on Earth. Perhaps the superintelligence decides it wants to go all ephemeral, and transcends what we understand as materiality to spend eternity as an amorphous dark matter cloud in space. We don’t know.

While the seeming inevitability of the singularity weighs on the collective consciousness of humanity, it is not the only possible outcome. As many technologists have observed, the more progress science makes towards understanding what intelligence is, the more difficult further progress becomes. Paul Allen referred to this as the complexity brake. In fact, a law of diminishing returns can be observed in numerous intellectual, social and scientific domains. The complexity brake is the norm: nascent domains and fields of study explode in development, it feels like we’ll be ‘there’ any minute, and then progress slows as the remaining problems grow harder.

Here are a few examples of the complexity brake in action:

Fusion energy: Commercially viable fusion power has been 30 years away for the last 70 years. Breakthroughs continue to stall at the scaling phase. It is always just around the corner.

Self-driving cars: Projects like Waymo and Tesla’s Autopilot are impressive given how far they’ve come, but they have not reached Level 5 autonomy, and they won’t next year, either. So close, yet so far away from full autonomy.

Moore’s law: Now that chipmakers are shipping “2-nanometer” process nodes, physics is getting in the way of the law. Quantum tunneling makes further shrinking difficult, and each new generation of transistors costs substantially more money to produce. More effort for less ROI.

VR headsets: Headsets like the Quest 3 are very impressive, but if you had asked me in 2015 where we’d be by 2025, I’d have expected significantly more sophisticated devices. Building and scaling hardware like Apple’s Vision Pro requires painstaking innovations that take years to get right. Meta’s Orion is impressive, but we are easily 6-10 years away from affordable consumer devices with the sort of quality we have come to expect from devices like smartphones.

The pattern here is this: These things will happen, but they are happening slower than we often expect. A decade ago, Waymo had driverless cars roaming around the streets of Palo Alto. Google Glass seemed like the kind of precursor to smart glasses we’d have in 2025. We have more intelligent people and tools than ever before, so why is everything taking longer?

The asynchronous singularity is this: progress towards the development of AGI will continue, but the singularity will not be a clean launch. It will be staggered and uneven, dictated by compute, material, and social constraints.

Patchy Deployment and Cultural Adoption

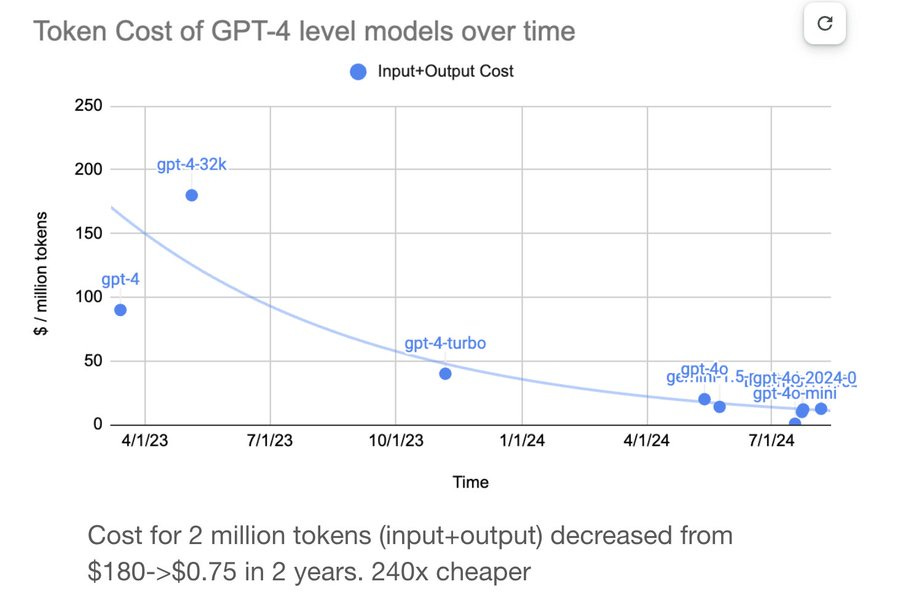

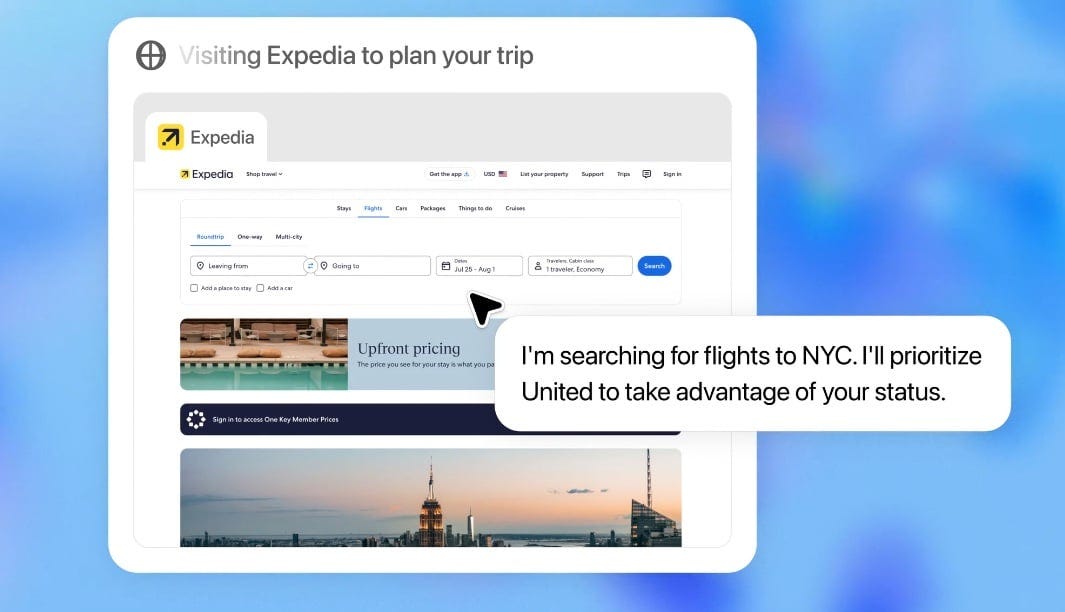

Artificial intelligence development and usage are expensive. For now, the most useful models, like GPT-4o (which in my opinion strikes a great balance between multimodality and cost), are still relatively expensive to run. As multimodality increases, so does the justification for using these models. But multimodality is expensive. Engineers in San Francisco can justify spending $200 a month on Claude Code, but they are the exception globally, not the norm. It has taken a few years since the release of GPT-3 for the cost of regular text token usage to drop to levels accessible to the average person. And that is token usage for text, not for images, video, or agentic computer use.

The cost of token usage rises dramatically with multimodal systems, in lockstep with their usefulness. More efficient algorithms and larger compute clusters will come, but building data centers takes time. For now, regular multimodal usage of the smartest models the world of AI has to offer is still out of reach for most people on the planet. Things will look very different in a decade, but agentic computer use and humanoid robots will not be free.

This only speaks to whether such systems are economically viable, not whether they will be culturally embraced. Does a dry cleaning service in rural Greece need access to OpenAI’s latest AGI model? The existence of AGI will have no meaningful initial impact on huge swaths of the world. Even if it is nearly free, humans (until AI is embodied) must be the ones to make the changes AI proposes. People can use AI to build better software systems, and to aid in the development and manufacturing of devices that increase efficiency, but just because you build something better doesn’t mean it will be embraced. Or even that people will care.

There’s an assumption that extraordinarily smart tools will be adopted simply because they’re smart: that if something is sufficiently smart, other barriers to adoption will evaporate. History has shown us that this is not the full picture. Cultural resistance and long-tail edge cases shatter this illusion of a seamless rollout. Silicon Valley may have an AGI model in the next decade that can brilliantly and agentically use a computer, but it will not be accessible or affordable for most. A minority of humans on the planet will benefit from superintelligence first, and rollout will be dictated by dollars, infrastructure, and social acceptance.

Beyond all of this, the interface is still the bottleneck. We are already at the limit of our human capacity to absorb, retain, and act on intelligence. We are the bottleneck.

The Human Brain Bottleneck

If you’re anything like me, you probably have a few people in your life who have quickly and shamelessly embraced AI. You may have friends who talk to ChatGPT as a therapist, constantly generate AI images, and use LLMs at work to generate documents and act as a virtual assistant.

These people are still the exception, not the norm. For many people, using AI is exhausting. If you ask it about a topic, the usefulness of the output is limited by your ability to absorb information. If you’re interested in a subject, you may absorb more, and if you’re bored to tears by something, you may not make the effort to prompt your way to caring. We’re already at a point where AI can be used to make coursework, and we’re not too far away from courses generated by video diffusion models. Perhaps in 5 years, people will be one-shotting VR software, rapidly going from idea to 3D, immersive content. We have a lot to be excited about.

Two major barriers to adopting and acting on the intelligent output of AI remain:

Long-term memory and context in AI systems are still an issue. LLMs don’t learn from their interactions the way people do; once trained, they stay largely static rather than thinking, learning, and growing over time. Even if a UI is intuitive and agentic, the intelligence behind it still lacks the kind of long-term memory and context we might expect.

Even when the above problem is solved, human beings will still be limited by our minds. The incredible AGI model of 2035 might come up with an amazing solution, and we might even trust it, but implementing solutions beyond software will require a human or embodied mind.

This is the interface problem. Even once we get superintelligent AI models that act as a sort of god in the cloud, they only exist in the cloud. We can give them software access to hardware, but the physical human world remains largely out of reach. For intelligent solutions beyond human comprehension, embodiment or neural augmentation (like Neuralink) are the only paths forward.

Cloud Gods vs. Street-Level Reality

Sam Altman and Elon Musk, like many in the recursive self-improvement believers club, foresee robots building robots. Just like a Tesla gigafactory, we build a machine that builds a machine, and then that machine quickly iterates, building increasingly complicated machines that are beyond human comprehension. In theory, this makes sense: once an AGI or superintelligence exists that can learn and has superior memory, it is only a matter of time before that system is used to build something beyond itself.

Let me briefly define what I mean by embodiment and augmentation:

Embodiment: Robots (likely humanoid) that take up space in the physical world. They walk, talk, can lift objects, negotiate, sit in a room with people, and perform in the world like people do. An embodied intelligence may or may not have full autonomy.

Augmentation: Humans who, wishing to better understand intelligence beyond their biological constraints, modify their brains and nervous systems with brain-computer interfaces (BCIs). Theoretically, in the future, this might allow for superhuman abilities, like superior memory and intellect (via a tertiary layer of superintelligence).

These separate paths to solving the interface problem will be pursued simultaneously. As of 2025, more money is being poured into robotics than into brain-computer interfaces, and BCI technology has decades to go before it can feasibly do what we’re imagining above.

However, the complexity brake on recursive self-improvement will bare its nasty teeth in the following ways:

Scaling compute: Building data centers takes time. We’re talking 3-5 years from planning to operation.

Energy constraints: Humanity needs to 10x its energy output (at a minimum, likely more) to meet the requirements of an embodied singularity. Building power plants takes time, and that assumes regulation and logistics don’t slow it down.

Raw material constraints: Even if you solve compute, you still need to acquire raw materials, refine them, and manufacture the chips and components needed to scale whatever it is you’re attempting to deploy. There aren’t enough lithium mines to deploy humanoids before 2040 on the scale Elon has proposed.

Robots building data centers and bootstrapping factories is a dream. It may one day be a reality, not unlike scenes from The Matrix. For now, hardware is hard, logistics remain slow, and social friction arises at every step of the way. And even if we can build humanoid robots that can act intelligently in the world, it doesn’t mean they will be embraced socially. I wrote about this at length a couple of months ago.

Nearly every sci-fi movie suggests that silicon intelligence acting autonomously in the world will be met with skepticism at best and social upheaval and revolt at worst.

Now suppose humanoids are built that act on superhuman intellect. Will humans understand their intentions? On some level, we won’t completely understand the efficiency gains, how they work, or why they need to happen. Try to imagine a group of humanoids coming to a city and telling the people what to do. How’s that going to play out? Have you ever hesitated to carry out a plan you didn’t write and don’t understand?

Embodiment and augmentation are necessary for realizing the potential of superintelligence. We can have ASI in the cloud, and we can certainly attempt to employ it to come up with ingenious new engineering and scientific solutions, but acting on them beyond the box they’re devised in requires boots on the ground. If embodiment and augmentation arrive in our lifetime, they will be met with massive resistance, and entire swaths of the human population may reject them outright.

So, what’s actually going to change? The implementation of superintelligence may look very different from the narrative we’ve been fed by OpenAI and on some level by the U.S. technocracy.

What Breaks and What Doesn’t

The singularity is a loaded term. It is like consciousness: we constantly feel like we can define it, and it ends up being vastly more difficult to really pin down or elegantly explain.

We know that the coming of the singularity (assuming we can even agree on when it happens) will bring about profound human change. AI may become uncontrollable, and the future of humanity is uncertain. It is wild to think we’re collectively racing towards building something we can neither understand nor control, but the uncertainty and the AI arms race have made this an inevitability.

In the short term, we should expect profound changes to work done on computers. Code, writing, research, and creative output for marketing have already been affected, and the impact will only grow. Once agentic computer use becomes better and more affordable, further job displacement within white-collar work will follow. Think on the scale of the next 3 to 7 years. As many have pointed out, models still cannot truly learn, but once this problem is solved (perhaps via a successor to current LLMs), white-collar jobs will be even more at risk.

What will not change for humanity in the short to medium term is what some are calling emotional infrastructure: culture, family, human preferences, and symbolic professions. I wrote about this a few months ago and strongly believe this is where we are heading. Human interests, loves, and fears are the biggest drivers of the world economy. Even if AI has a continual adaptation breakthrough (memory and reasoning more closely resembling a human’s), it remains to be seen how this will affect huge chunks of the economy. We like to believe that everything boils down to money, and that a contractor would hire a team of robots simply because they’re cheaper. But because this scenario has never played out, we simply do not know.

This leads us to post-materialism. If intelligence is abundant, then the new scarcity in the universe becomes meaning. With additional time because of increased automation, larger chunks of the economy may turn towards art, music, and philosophy. Just as many may turn towards hedonism. We will solve many problems (breakthroughs in medicine, science, and engineering) and create new ones (existential dread and futility).

While the case for investing in intelligence and augmentation infrastructure has become obvious, investing in human-centric roles and professions is arguably just as important for the future. There should be no doubt that people will still enjoy concerts, movies, music, and art 30 years into the future. Intelligence might become too cheap to meter, but trust and emotional integration of those tools will become more important than ever.

Moving Forward

The year is 2045, and we’re back in Athens standing at the foot of the Parthenon. The Acropolis is just as busy as ever, with tourists snapping photos with their glasses and vintage DSLRs. Among the crowd, you can see a few robots walking around – one is sitting at a kiosk selling tickets, and another is picking up trash. The tour groups look largely the same: a human guide is lecturing a group of humans about the history of this 5th-century B.C. hilltop temple, and about half of them are listening.

They tried out humanoid tour guides a couple of years ago, but people weren’t paying attention to them and teenagers kept knocking them down as a joke. The immersive Parthenon AR experience at the site is really cool, but it costs 10 extra bucks, so most people don’t even bother. The guy with the gyro cart at the foot of the hill still sells his sandwiches at 2025 prices, and it is almost lunchtime. Are you hungry?

There is a long road ahead of us as we approach the singularity. Along the way, we will encounter complexity brakes, scaling bottlenecks, and human resistance. Embodiment and augmentation will happen over the course of our lives, not in a single decade.

Humans are still the apex species on the planet.

This is the realistic singularity, the asynchronous singularity. It isn’t gentle or easy. It is a messy, convoluted, fractured landscape, with a million variables that will get in the way of the implementation of superintelligence.

Thanks for reading.

Written by a human,

- Chris
