GPT-4: Living in the Exponential Curve

The biggest changes, differences, and key takeaways from OpenAI’s latest AI system

You blink, your eyelids fluttering like hummingbird wings, as the technicolor dawn of a new era washes over you. It's as if an invisible hand has turned the cosmic dial, accelerating time to the rhythm of Moore's Law, and thrusting you into a futuristic wonderland. Unfamiliar metallic voices hum around you, harmonizing with the whirring of robot companions that zip through the air like a ballet of mechanical bees. Your heart races with the thrill of the unknown, as artificial intelligence breathes life into every nook and cranny of this brave new world. The once mundane landscape has been transformed into a kaleidoscope of holographic delights, and you, with a childlike sense of awe, feel the irresistible urge to dive headfirst into the pulsating heart of tomorrow.

We are all living in the great curve of unfathomable progress. By OpenAI’s own estimate, the compute used in the largest AI training runs has been doubling roughly every 3.4 months, far outpacing Moore’s Law, under which transistor counts double about every 2 years. Life is becoming more and more like a Philip K. Dick novel, and somehow the old phone you haven’t used in years still turns on and holds a charge. Time on the physical plane is passing slowly, but the information superhighway is entering warp 9.
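To put the two doubling rates side by side, here is a minimal arithmetic sketch. It assumes both trends are clean exponentials over a two-year window, which is a simplification; the function name `growth_factor` is made up for illustration.

```python
def growth_factor(elapsed_months: float, doubling_months: float) -> float:
    """Return how many times a quantity multiplies after elapsed_months,
    assuming it doubles once every doubling_months."""
    return 2 ** (elapsed_months / doubling_months)

# Compare both trends over the same two-year (24-month) window.
ai_compute = growth_factor(24, 3.4)  # doubling every 3.4 months
moores_law = growth_factor(24, 24)   # doubling every 2 years

print(f"AI compute over 2 years: ~{ai_compute:.0f}x")
print(f"Moore's Law over 2 years: {moores_law:.0f}x")
```

Under these assumptions, the 3.4-month trend compounds to well over a hundredfold in the time Moore's Law delivers a single doubling.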

Here Comes the Cowboy

OpenAI has officially unveiled GPT-4, marking a significant milestone in the realm of AI language technology. Described by OpenAI as its "most advanced system," GPT-4 promises safer and more useful responses and puts an end to the long-running rumors about what it would be able to do. This advanced model is designed to be more creative, collaborative, and accurate in solving complex problems. GPT-4 has already attracted interest from major companies, such as Duolingo, Stripe, and Khan Academy, which are eager to integrate its capabilities into their products and services.

The announcement was made on March 14, 2023, with OpenAI confirming GPT-4's availability in the ChatGPT Plus paid subscription. However, the free version of ChatGPT will continue to use GPT-3.5.

Integrations and Demonstrations

Developers can access GPT-4 through an API to build applications and services. Notable companies, such as Duolingo, Be My Eyes, Stripe, and Khan Academy, have already integrated GPT-4 into their platforms. A public demonstration of GPT-4 was live-streamed on YouTube, showcasing its new capabilities.

Microsoft's Bing chatbot is also powered by GPT-4. The waitlist for the GPT-4 API is currently open, and OpenAI plans to admit users shortly.

If you’re interested in using the API, sign up for the waitlist here: https://openai.com/waitlist/gpt-4-api
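For a sense of what building on the API involves, here is a rough sketch of a single-turn request to OpenAI's chat completions endpoint. It only constructs the headers and JSON body and does not send anything. The helper name `build_chat_request`, the system message, and the `YOUR_API_KEY` placeholder are illustrative assumptions, and the `gpt-4` model name will only work once your account has been granted access.

```python
import json

# Public REST endpoint for OpenAI's chat-style models.
API_URL = "https://api.openai.com/v1/chat/completions"

def build_chat_request(prompt: str, api_key: str, model: str = "gpt-4"):
    """Build (headers, body) for a single-turn chat completion request.

    Hypothetical helper for illustration; it performs no network I/O.
    """
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }
    body = {
        "model": model,
        "messages": [
            # The system message wording is an arbitrary example.
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
    }
    return headers, json.dumps(body)

headers, body = build_chat_request(
    "Summarize the GPT-4 announcement in one sentence.",
    api_key="YOUR_API_KEY",  # placeholder, not a real key
)
print(body)
```

Sending the request is then a plain HTTPS POST of `body` to `API_URL` with those headers, using whichever HTTP client you prefer.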

There’s so much to dive into here. Broadly speaking, the demonstrations are a clear leap in progress from GPT-3.5. People online are testing out GPT-4 and finding that it is able to summarize, compose, and in general generate content more accurately and with greater creativity and bravado.

Some of the most interesting demonstrations of the technology today have been the following:

Searching for credit card transaction data and transforming it into JSON.

Creating a “one click lawsuit” program using GPT-4 to sue robocallers.

It is clear that while coding is probably where GPT-4 is weakest, it is still incredibly powerful as a tool for programming. As Pietro mentions later in the thread, this was the first time he’s gotten a program created by a GPT model to work in a single try. That was previously unthinkable with GPT-3.5. So much more is going to be tested and mentioned on Twitter in the coming days, so I’m sure by the time you read this other incredible tests will have gone viral.

What's New with GPT-4?

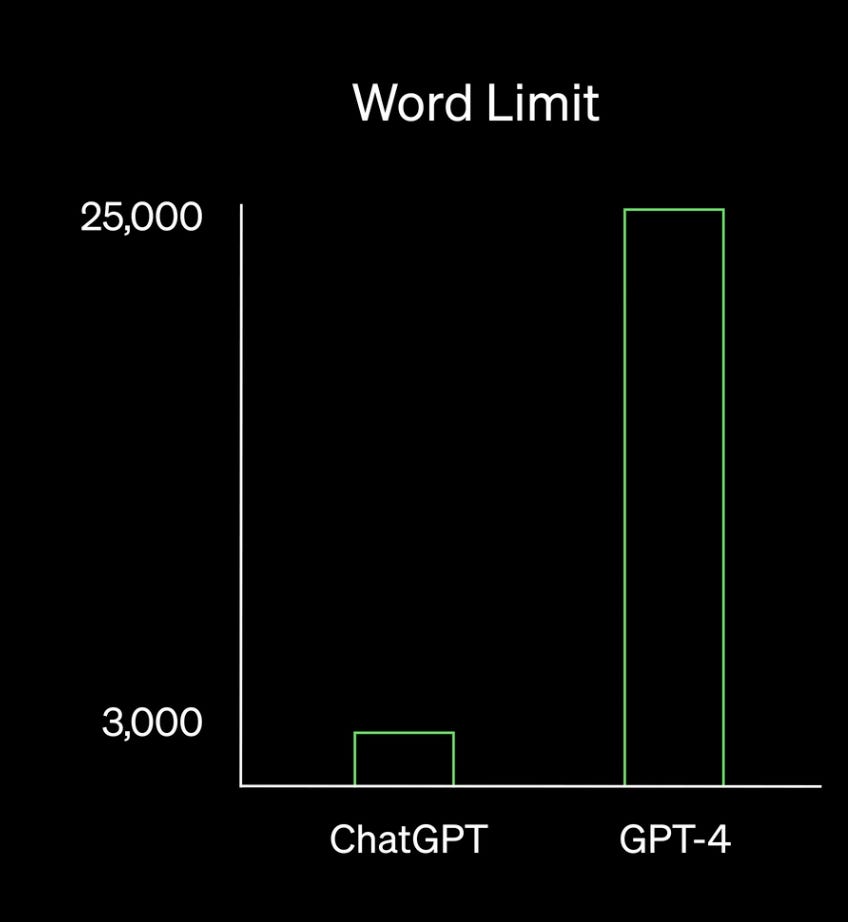

GPT-4 exhibits advancements in three key areas: creativity, visual input, and longer context/content. With improved creative collaboration, GPT-4 can generate music, screenplays, and technical writing, and can even adapt to a user's writing style. The extended context allows GPT-4 to process up to 25,000 words, interact with web-linked content, and engage in extended conversations. For context, George Orwell’s Animal Farm, a well-known novella, is 29,966 words. We’re rapidly approaching a point where these models can process an entire (small) book in one prompt.
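The 25,000-word figure lines up with a back-of-the-envelope conversion from tokens to words. The sketch below assumes a 32,768-token context window and a rough rule of thumb of 0.75 English words per token; neither number comes from this article, and real token counts vary with the text.

```python
WORDS_PER_TOKEN = 0.75   # assumption: rough rule of thumb for English text
CONTEXT_TOKENS = 32_768  # assumption: the larger GPT-4 context window

def approx_word_budget(context_tokens: int = CONTEXT_TOKENS) -> int:
    """Estimate how many English words fit in a context window."""
    return int(context_tokens * WORDS_PER_TOKEN)

def fits_in_context(word_count: int, word_budget: int) -> bool:
    """Return True if a text of word_count words fits within word_budget."""
    return word_count <= word_budget

animal_farm_words = 29_966  # word count quoted above
budget = approx_word_budget()

print(f"Estimated word budget: {budget}")
print(f"Animal Farm fits: {fits_in_context(animal_farm_words, budget)}")
print(f"Share of the book that fits: {budget / animal_farm_words:.0%}")
```

By this estimate Animal Farm does not quite fit in a single prompt, but most of it does.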

A Note On Exam Benchmarking

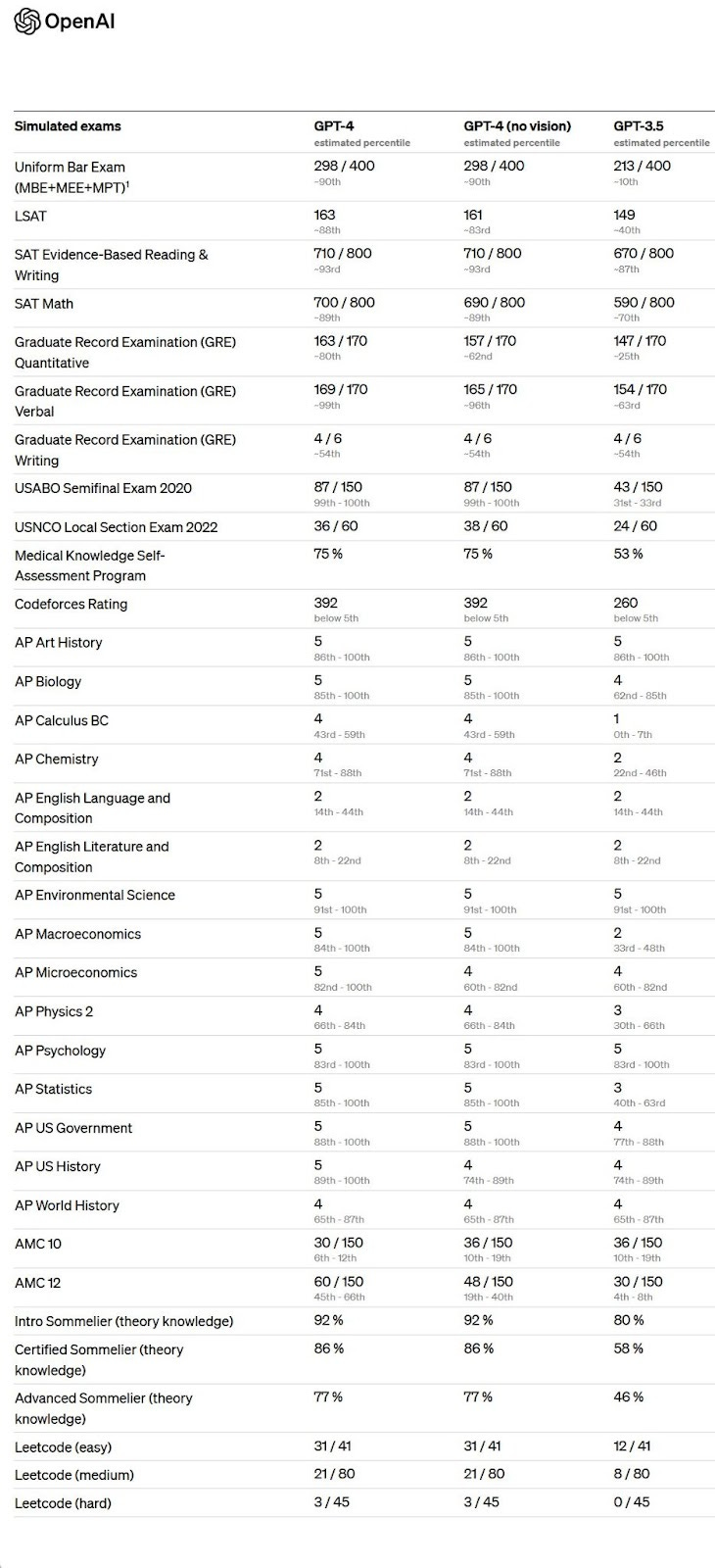

GPT-4's enhancements are evident in its performance on various tests and benchmarks. For instance, it has scored in the 88th percentile and above on exams like the Uniform Bar Exam, LSAT, SAT Math, and SAT Evidence-Based Reading & Writing. It passes almost every test it takes, and the only notable instances where it fails are advanced sommelier theory tests and Leetcode problems beyond the easy tier. Programmers, you’ve still got your jobs at least for the next year.

If you check out the appendix of the GPT-4 technical report, you’ll find a lengthy explanation of the exam benchmarking methodology, including how the tests were sourced, run, and scored, and how questions were at times adapted so they could be posed to and answered by the model.

If you end up diving into the report, you’ll notice the term RLHF. This is an important concept within the field of machine learning.

Reinforcement Learning from Human Feedback (RLHF) is a reinforcement learning approach that trains a model using human feedback on its outputs, typically by having people compare or rank responses. By incorporating subjective human judgments, RLHF can capture complex objectives that are difficult to express as a hand-written reward function. The technique aims to improve performance and reduce the risk of unintended behavior by aligning the model more closely with real-world problems and human values.
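One common ingredient of RLHF pipelines is a reward model trained on pairs of responses where a human picked the better one. The sketch below shows a pairwise preference loss in the Bradley-Terry style often used for that step. It is a generic illustration, not OpenAI's actual GPT-4 training code, and the function name and example scores are made up.

```python
import math

def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss: -log(sigmoid(reward_chosen - reward_rejected)).

    The loss is small when the reward model scores the human-preferred
    response higher, and large when it ranks the pair the wrong way round.
    """
    margin = reward_chosen - reward_rejected
    # Numerically stable form of log(1 + exp(-margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

# Hypothetical reward scores for two responses to the same prompt.
print(preference_loss(2.0, 0.0))  # reward model agrees with the human label
print(preference_loss(0.0, 2.0))  # reward model disagrees, so the loss is higher
```

Minimizing this loss over many labeled pairs nudges the reward model toward human rankings; the language model is then tuned to produce responses that the reward model scores highly.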

Adding vision capabilities certainly helps with performance, especially on graphically heavy tests, as shown by the AP Microeconomics performance.

Why Multimodal Models Matter

GPT-4 is a multimodal system that can process both text and image inputs, allowing it to interpret more complex input types. This ability enables GPT-4 to generate meaningful text responses based on a combination of visual and textual data, expanding its potential applications.

Eventually, one can imagine that this system won’t be limited to just images on the internet, and you’ll be able to also upload anything and have it analyzed.

(This example, taken from the technical paper, went viral a couple of hours after GPT-4 access became available.)

But why is this such a game changer? What else can a multimodal model provide that past iterations couldn’t?

Here’s a list of what multimodal GPT systems can do, and will eventually be able to do:

Image captioning: MM-GPTs can generate natural language descriptions of images, which can be useful for accessibility applications, such as aiding visually impaired users in understanding the content of an image.

Visual question answering: MM-GPTs can answer questions about images by interpreting the visual content and generating text. This can be useful in various applications, from customer support to education. Text-only models would require manual or separate image processing to achieve a similar result.

Image synthesis from textual descriptions: MM-GPTs will eventually be able to generate images based on text descriptions, enabling applications like art generation, design, and virtual reality. Text-based systems are unable to generate visual content directly, which limits their creative potential.

ChatGPT currently cannot do this, but it’s entirely possible OpenAI will integrate image diffusion models like DALL-E 2 into ChatGPT.

Audio-visual speech recognition: MM-GPTs can process both audio and visual input, allowing them to perform more accurate speech recognition in noisy environments by incorporating lip-reading information. Audio-only systems rely solely on sound, which can result in poorer performance in challenging conditions.

ChatGPT currently cannot do this, but OpenAI has been experimenting with audio for a while. The Jukebox neural net that generates music is worth checking out here.

Video summarization: MM-GPTs can analyze and understand video content, generating text-based summaries of the key events or themes. This can be useful for news, sports, or entertainment applications. Text-based systems cannot process video data and would require manual video analysis or a separate processing pipeline.

ChatGPT currently cannot do this, but one might imagine a premium service where you upload video content and have it analyze and spit out summaries or other useful information. Perhaps we’ll see that later this year.

Enhanced Safety Measures

OpenAI has made GPT-4 safer than its predecessors. According to the company, it is 40% more likely to produce factual responses than GPT-3.5 and 82% less likely to respond to requests for disallowed content. These strides were achieved through human feedback and collaboration with over 50 experts in AI safety and security.

The effort for this shows. The GPT-4 technical report is lengthy, but there are a ton of knowledge (chicken) nuggets that showcase the amount of effort the team put into training this new model.

OpenAI knows that language models can often amplify biases and perpetuate stereotypes, and this is due in large part to the data sets these systems are trained on. According to the technical paper, the model before safety mitigations are applied “has the potential to reinforce and reproduce specific biases and worldviews, including harmful stereotypical and demeaning associations for certain marginalized groups. A form of bias harm also stems from inappropriate hedging behavior. For example, some versions of the model tended to hedge in response to questions about whether women should be allowed to vote.”

Seeing the responses GPT-4 gave before its safety training was complete is an indicator of how truly open-source GPT-style models may respond once they’re unleashed and used en masse in the wild. For some context, over the span of just a couple of weeks, LLaMA (Meta’s large language model) leaked on BitTorrent, and then a developer by the name of Georgi Gerganov created an app allowing it to run (albeit slowly) on an M1 Mac. Subsequent releases got Georgi’s creation to work on a Raspberry Pi 4, and even a Pixel 6 phone.

Simon Willison’s blog has a trove of information about this specific subject here.

We will begin to see in the coming months various sized models running on people’s home computers. Eventually, the models people will be running independently will resemble something akin to the proliferation of image diffusion models we saw released into the wild in the summer and autumn of 2022.

Limitations

Despite its impressive capabilities, GPT-4 still has some limitations. Like previous language models, it can generate harmful content, and it can confidently state things that are false, a failure known as "hallucination." OpenAI has implemented safety training to mitigate these issues, but the risk remains.

As GPT-4 becomes more widely adopted across industries, its limitations have sparked concerns about its potential misuse or negative impact. For instance, the education sector is grappling with AI-generated essays, Stack Overflow has banned AI-generated answers, and the magazine Clarkesworld has had to close submissions after an influx of AI-created content. Nevertheless, some argue that the harmful effects of these models have been less severe than anticipated, and that the focus should remain on harnessing their potential for the greater good.

As Sam Altman has repeatedly emphasized, no, this isn’t AGI, and it won’t be AGI even if they add another 100 trillion parameters. GPT-4 is a stepping stone towards future systems that might resemble AGI, but we’re not entirely sure what that will look like yet. This is a big step, especially when compared to GPT-3.5, and the abilities of GPT-4 shine brightest when the model is tasked with something humans might consider more cognitively demanding.

TL;DR Takeaways

OpenAI has unveiled GPT-4, a significant advancement in AI language technology.

The advanced model promises safer and more useful responses, enhanced creativity, and problem-solving capabilities.

Major companies like Duolingo, Stripe, and Khan Academy are already integrating GPT-4 into their platforms.

ChatGPT utilizing GPT-4 is available through a paid subscription, while the free version continues to utilize GPT-3.5.

GPT-4 demonstrates advancements in creativity, visual input, and longer context/content processing.

The model has performed well on various benchmarks and exams, showcasing its enhanced capabilities.

GPT-4 is a multimodal system that can process both text and image inputs, expanding its potential applications.

OpenAI has made GPT-4 safer through human feedback and collaboration with experts in AI safety and security.

Despite its impressive capabilities, GPT-4 still has limitations, including generating false or harmful content.

GPT-4's widespread adoption raises concerns about potential misuse and negative impact, but many argue that its benefits outweigh the risks.

OpenAI's GPT-4 marks a significant milestone in AI, offering safer and more useful responses, enhanced creativity, and problem-solving capabilities. As a multimodal system, it can process both text and image inputs, expanding its potential applications across various industries. Despite its limitations, such as generating false or harmful content, GPT-4 has garnered interest from major companies eager to harness its potential for the greater good. As AI technology continues to advance, it will be crucial for researchers, developers, and users alike to address potential concerns and focus on leveraging these powerful tools for positive impact.

As you close your eyes, weary from a day immersed in the pulsating heart of tomorrow, you drift into the realm of dreams. The rhythm of Moore's Law, which had quickened the pace of the waking world, now ushers you into a realm of slumber where electric sheep gambol playfully across the velvety skies. Their metallic bodies, bathed in the soft glow of technicolor moonlight, dance with the grace of an algorithmic ballet, an homage to the visionary works that dared to explore the depths of human experience and the ever-evolving relationship between humanity and artificial intelligence. As you surrender to the embrace of this fantastical dreamscape, the once mundane boundaries of reality blur, leaving you to ponder the true nature of existence in a world forever changed by the relentless march of progress.
