DolphinGemma and the Quest for Local Universal Translation

How Google’s DolphinGemma points to an on-device, real-time translation future

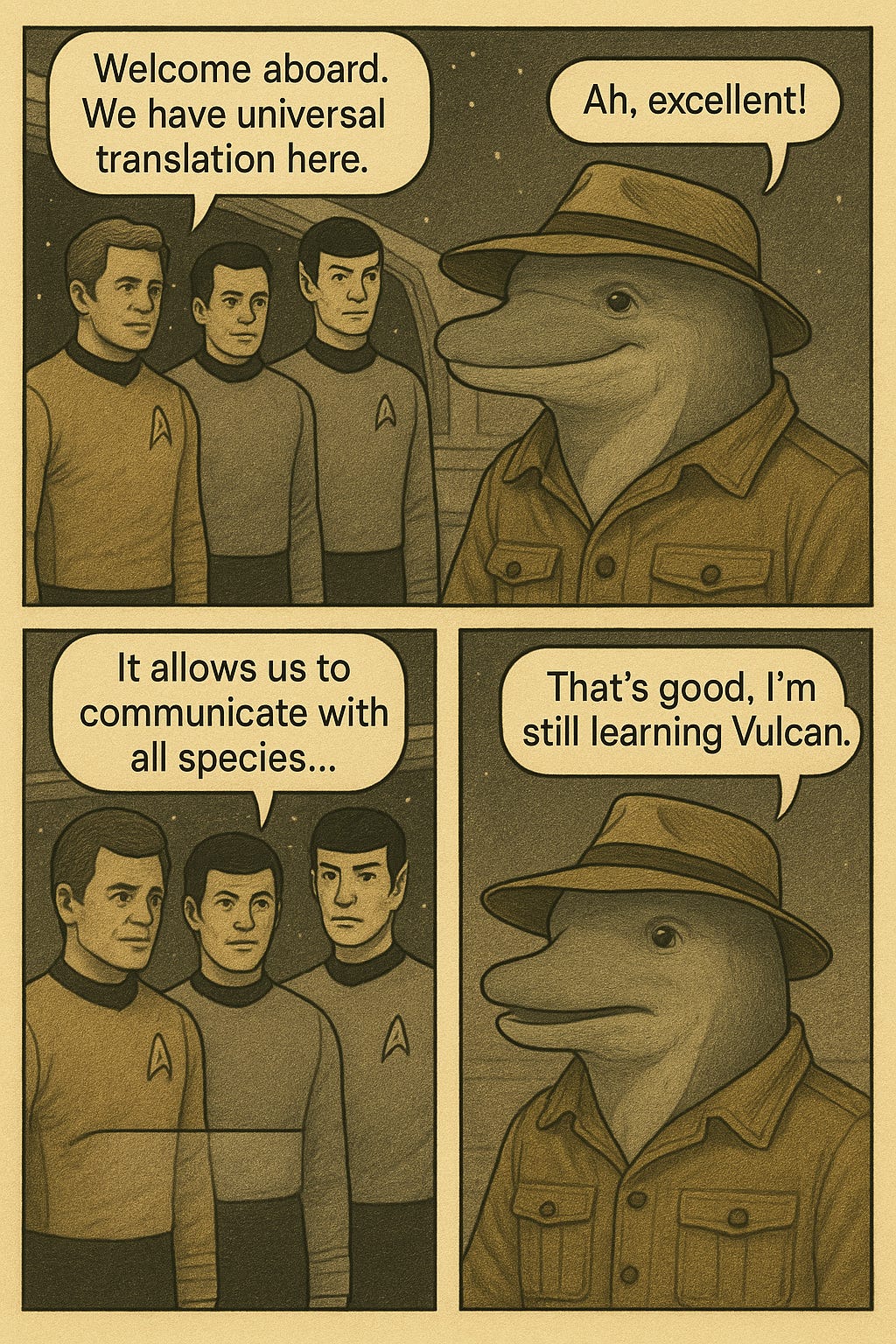

In the now-famous Star Trek original series episode ‘Who Mourns for Adonais?’, the Enterprise crew encounters Apollo, a god-like being from ancient mythology. It is in this episode that Captain Kirk initially describes the function of the universal translator (UT): a device used by Starfleet and built into the communication systems of most of its starships. Eventually, across the later series, UTs advance to the point where they can be built into the combadges worn by the crew, enabling instant, natural, and seamless communication.

When ‘Who Mourns for Adonais?’ was written nearly six decades ago, the idea of a pocketable, universal translation computer was truly science fiction. Today, such a device is no longer just a fantasy; it is very nearly a reality.

We’re going to explore what DolphinGemma is, what small language models are, and how both relate to this idea of a universal, pocketable, local translator. As it turns out, academic research into how dolphins communicate is closely related to Apple’s push to have AirPods Pro 3 support instant translation.

What is DolphinGemma?

Over the last week, the internet blew up with the news that Google was working with the Georgia Institute of Technology, alongside the Wild Dolphin Project (WDP), to release a Gemma-series AI model trained to learn the structure of dolphin sounds. The goal is to uncover what those sounds mean and to figure out whether dolphins have a shared language.

For decades, despite studying dolphins and gathering countless clips of their signature whistles, squawks, and buzzes, we haven’t been able to figure out what they’re saying. We know they have names for each other, and we can classify certain sounds as being associated with encounters or activities, but that’s about it. There’s no Rosetta Stone to be dug up, and the best linguists in the world are utterly lost. Time to try AI.

Luckily for Google, the WDP had already labeled much of the data that would be needed to train DolphinGemma. DolphinGemma is built on Google’s Gemma architecture. In a nutshell, that means the model is lightweight, open, able to run on a small device, and, in the latest Gemma versions, multimodal. It runs on a phone and uses SoundStream, a neural audio codec that compresses audio. Essentially, raw audio waveforms are transformed into lower-dimensional embeddings (compressed numerical representations), which SoundStream then converts into discrete tokens. Treating audio as a sequence of tokens is much the same approach that small language models (SLMs) could take in the future for real-time translation on phones.

The process for this is very cool:

Data Collection & Annotation: Researchers from the WDP have gathered extensive underwater audio and video recordings of Atlantic spotted dolphins since 1985. Each recording is meticulously annotated with behavioral contexts, such as mother-calf reunions, social interactions, and hunting behaviors, providing a rich dataset for analysis.

Audio Tokenization with SoundStream: The captured dolphin vocalizations are processed using Google's SoundStream, a neural audio codec that compresses and tokenizes the audio into discrete units suitable for analysis.

Pattern Analysis with DolphinGemma: The tokenized sequences are analyzed by DolphinGemma, a lightweight model with approximately 400 million parameters, to identify patterns and structures within dolphin communication.

Real-Time Deployment: DolphinGemma runs efficiently on devices like Google Pixel smartphones used by researchers in the field, enabling real-time analysis of dolphin sounds.

Interactive Communication via CHAT System: In conjunction with the Cetacean Hearing Augmentation Telemetry (CHAT) system, synthetic whistles associated with specific objects are introduced. Dolphins are encouraged to mimic these whistles to request items, facilitating a form of two-way communication. This may lead us to uncovering their language.
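To make the tokenize-then-find-patterns steps above a little more concrete, here is a toy sketch in plain Python. It is not SoundStream or DolphinGemma: the tiny one-dimensional `CODEBOOK`, the frame size, and the helper names are all made up for illustration. A real neural codec learns its codebooks, and a real model learns far richer structure than counting which token follows which.

```python
from collections import Counter

# Hypothetical 1-D "codebook". Each entry stands in for a learned audio pattern.
# A real neural codec learns vectors in a much higher-dimensional space.
CODEBOOK = [0.0, 0.25, 0.5, 0.75, 1.0]


def frame_means(samples, frame_size):
    """Split a waveform into fixed-size frames and summarize each by its mean."""
    starts = range(0, len(samples) - frame_size + 1, frame_size)
    return [sum(samples[i:i + frame_size]) / frame_size for i in starts]


def tokenize(samples, frame_size=4):
    """Map each frame to the index of its nearest codebook entry (a discrete token)."""
    tokens = []
    for value in frame_means(samples, frame_size):
        nearest = min(range(len(CODEBOOK)), key=lambda i: abs(CODEBOOK[i] - value))
        tokens.append(nearest)
    return tokens


def bigram_counts(tokens):
    """Count how often each token is immediately followed by each other token."""
    return Counter(zip(tokens, tokens[1:]))


# A fake "whistle": low, high, low, high, then a mid-level tail.
waveform = [0.0] * 4 + [1.0] * 4 + [0.0] * 4 + [1.0] * 4 + [0.5] * 4
tokens = tokenize(waveform)
print(tokens)                                # [0, 4, 0, 4, 2]
print(bigram_counts(tokens).most_common(1))  # [((0, 4), 2)]
```

The point is only the shape of the pipeline: continuous audio becomes a short sequence of integers, and once it is a sequence of integers, the same sequence-modeling tools used for text can look for recurring structure.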

The Pixel phone functions as the brain of the underwater operation, running the CHAT system, deep learning models, and a template matching algorithm. With the Gemma architecture, what was formerly impossible (real-time communication with dolphins) has become plausible. The Pixel replaces bulkier hardware, and breakthroughs with quantized, fine-tuned language models mean more is possible with less compute and RAM.
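A quick back-of-the-envelope calculation shows why parameter count and precision matter so much on a phone. The sketch below estimates the memory needed just to hold the weights of a model with roughly 400 million parameters at a few common precisions. The precision that DolphinGemma actually ships with isn't stated here, so treat the table as illustrative; it also ignores activations and other runtime overhead.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4.0, "float16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_mb(num_params, dtype):
    """Approximate memory for the weights alone, in megabytes (1 MB = 1e6 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e6


for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_mb(400_000_000, dtype):,.0f} MB")
# float32: 1,600 MB
# float16: 800 MB
# int8: 400 MB
# int4: 200 MB
```

Going from 32-bit floats to 8-bit integers cuts the weight footprint by 4x, which is the difference between a model that crowds a phone's RAM and one that fits comfortably alongside everything else the device is doing.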

We don’t know what dolphins are saying yet, but this magic is possible because of open, compact models. Right now, so much time and attention is focused on frontier models like o3, but the future is arguably just as exciting for the use cases made possible by small language models.

So, what makes small language models different? Why should you care? Is everyone going to be able to understand every language they hear in person using AirPods soon?

Let’s break down the key advances in small language models, like Gemma, to better understand where things are heading.

Small Language Models

The industry has advanced quickly since early 2023. Google released Gemma 2 in mid-2024, and it quickly made waves in the space for being lightweight, accurate, and highly performant. Just recently, Google released Gemma 3, which has a much larger context window, comes in multiple sizes, and is multimodal. Out of the box, for free, Gemma 3 supports over 140 languages! You can download the smallest model onto your phone and run it locally. If your phone is slightly more powerful, you can even run the 4B model locally, which is capable of analyzing images, summarizing documents, and more. The pretraining dataset for Gemma 3 covered more languages and used double the amount of multilingual data compared with Gemma 2. Google has a Gemma 3 technical report that dives into how the models were trained and instruction fine-tuned.

Like many advances in technology, the efficiency of models like Gemma is the result of a number of innovations in training techniques, memory utilization, and selective neural routing.

From advances with LoRA to speeding up attention on GPUs by minimizing memory reads and writes, the efficiency gains add up. Now, you can reliably run Gemma 3 on your phone locally, and it is more performant and intelligent than GPT-3 was when it was released in 2020. All for free.
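LoRA is a good example of how these savings work. Instead of updating a full weight matrix W during fine-tuning, you freeze W and train two small matrices, A and B, whose product is a low-rank correction: y = Wx + (alpha / r) · B(Ax). Below is a minimal sketch in plain Python, assuming tiny hand-written matrices and a hypothetical 4096 x 4096 layer for the parameter count. It illustrates the idea and is not how any particular library implements it.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha, rank):
    """Compute y = W x + (alpha / rank) * B (A x). W is frozen; only A and B train."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))  # project down to `rank` dims, then back up
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


def trainable_params(d_out, d_in, rank):
    """Return (full fine-tuning params, LoRA params) for one weight matrix."""
    return d_out * d_in, rank * (d_in + d_out)


# Tiny example: a 2x2 frozen weight with a rank-1 adapter.
W = [[1, 0], [0, 1]]  # frozen pretrained weights
A = [[1, 1]]          # rank x d_in
B = [[1], [0]]        # d_out x rank
print(lora_forward(W, A, B, [1, 2], alpha=1, rank=1))  # [4.0, 2.0]

full, lora = trainable_params(4096, 4096, rank=8)
print(full, lora)  # 16777216 65536
```

For that hypothetical layer, the adapter trains 65,536 values instead of roughly 16.8 million, a 256x reduction, which is why fine-tuning a model for a niche task no longer requires datacenter-scale hardware.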

Key advances for reducing the size of language models. Not all of these advances are used by Gemma 3, but each is worth learning about.

We’re clearly trending towards the following:

Models: Highly performant, open, small language models that are increasingly accurate, knowledgeable, and wicked fast out of the box

Hardware: TPUs (tensor processing units), NPUs (neural processing units), and AI-centric (ASIC) chips that are standard in flagship smartphones and, soon, likely in all phones

Software: Further advances in compressing model weights, optimizing memory, selective routing, and optimization for utilizing GPU/TPU/NPU memory
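As one concrete example of compressing model weights, here is a minimal sketch of symmetric 8-bit quantization in plain Python. The function names are my own, and real toolchains use more careful schemes (per-channel scales, calibration data, 4-bit formats), so read this as the basic idea only: store small integers plus one scale factor, and multiply back when you need approximate floats.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using a single shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 1.27]
q, scale = quantize_int8(weights)
print(q)  # [50, -127, 0, 127]

restored = dequantize(q, scale)
worst_error = max(abs(w - r) for w, r in zip(weights, restored))
print(worst_error <= scale / 2 + 1e-12)  # True
```

Each weight now takes one byte instead of four, and the rounding error per weight is bounded by half the scale. Whether that error is acceptable depends on the model and the task, which is why quantized models are usually evaluated again after compression.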

The barrier to offline, accessible intelligence is shrinking. Real-time translation is coming almost as a side effect of these advances. The current iteration of Google Translate, the most widely used translation app, is built on transformer-based neural machine translation (NMT) rather than general-purpose LLMs. NMT models are designed specifically for translation and are more compact, making them ideal for on-device usage. The breakthroughs in language models and transformer architecture explain why, in 2024, Google was able to add 110 new languages to the app, nearly doubling its language support (made possible in part by their PaLM 2 model).

AirPods Pro 3: It’s All Coming Together

Apple made waves last month by announcing that the latest AirPods would feature the ability to translate in-person conversations from one language to another. Just imagining the ability to shrink the gap in understanding and increase human connection is genuinely exciting. Make no mistake, the first iteration of this will probably be underwhelming. If you’ve ever used Google Translate with another person to have a real-time conversation, you might recognize just how challenging and frustrating this can be. Currently, unless you have a lot of patience, Google Translate is slow and cumbersome at best for back-and-forth, real-time conversational translation. It works, but the experience is lacking.

So much of this is speculative. We have zero info on how this latest feature from Apple will work, what models will be utilized, and what the hardware constraints are. Apple is planning to improve the Translate app in iOS 19, and hopefully that means older models will get the update as well. But if I had to guess, the local model will utilize much of what we discussed above, and because Gemma is open, Apple might even utilize it.

What Comes Next?

Unless you’ve been closely following the developments with SLMs and the progress Google has made recently with translation, it might be easy to overlook just how far we have come. In a short period of time, humanity has rapidly improved the speed at which we can translate languages, lowered the cost, and even removed the need for internet access. While current models are not perfect, the trend is clear. Within a couple of years, it is entirely conceivable that most of us will be carrying around devices capable of near-real-time translation. Even today, you can freely download Gemma models and use them on your phone, privately and locally.

Humans are notoriously bad at predicting the true rate of technological progress. When ‘Who Mourns for Adonais?’ was written in 1967, the writers placed the plot 300 years into the future, in 2267. In less than a single human lifetime, we’ve gone from fictional writing about unbelievable technology to something we’re very close to perfecting. We’re seeing how performance gains with language models are having a real impact on scientific research, getting us closer to being able to communicate the latest memes to dolphins.

One day soon, we will all have a universal translator in our pocket. Communication barriers will shrink. We will be more connected. And this technology will be increasingly accessible to all. Efficiency gains with language models will change how we travel, work, and interact across cultures, making everyday life even better.

Thanks for reading.

- Chris
