Decentralized AGI and the AI Revolution: The Intersection of Data Privacy and Humanity's Future

Where we're at, and where we're going as we approach the singularity.

The past decade has been a transformative era for artificial intelligence. With the advent of more powerful processing, we've seen an explosion in AI capabilities reaching into every corner of our modern lives. From innocuous chatbots to controversial surveillance systems, AI's pervasive presence raises pressing questions about data privacy and ethical use of technology.

In an enlightening discussion at Consensus 2023, Edward Snowden, former U.S. defense contractor turned activist, and Ben Goertzel, CEO of SingularityNET, lent their insights on the intersection of AI and privacy. Moderated by David Morris, the conversation traversed topics of personal data, privacy rights, AI capabilities, government intervention, and the future of AI safety.

In this article, I’ll expand on what was discussed during the talk and provide more context on the goals of SingularityNET, why approaching artificial general intelligence (AGI) from a decentralized perspective is important, and where we think the future of data privacy is heading.

AI Surveillance and the Erosion of Privacy

In an era marked by pervasive surveillance and indiscriminate data collection, personal privacy is fast receding into obscurity. The dialogue between Snowden and Goertzel brings to the forefront the unsettling implications of AI's ever-expanding capabilities, especially as they relate to the massive reserves of personal data amassed by government agencies and private corporations.

The internet, once a vast frontier of free exploration and exchange, has become an intricate web of surveillance, where our every digital footprint is tracked, recorded, and stored. Snowden illustrates this with stark clarity: every online action, every digital interaction, regardless of our awareness or intent, feeds into databases of unimaginable scope and influence. In Snowden's view, this ceaseless vacuuming of data, regardless of our innocence or guilt, represents a flagrant transgression of fundamental human rights.

This pattern of surveillance has shown little change, even in the face of revelations such as Snowden's 2013 leaks or subsequent legal challenges. For instance, in 2018, the U.S. Supreme Court ruled in Carpenter v. United States that law enforcement agencies needed a warrant to access cell phone location data. Despite this, reports continue to emerge on law enforcement's use of invasive technologies, like facial recognition software and geofencing warrants, effectively bypassing traditional legal barriers to surveillance.

At the same time, government entities, for all their vast data repositories, seem to lack the nimbleness and finesse in leveraging these resources that private tech corporations like Google, Facebook, and Amazon exhibit. These tech giants have engineered sophisticated AI systems, often based on vast GPT-like models, capable of handling immense data sets and extracting valuable insights. Consequently, these entities hold a significant edge over government agencies, both in terms of data processing capacity and speed, effectively pushing the AI field towards increasingly data-intensive and computation-heavy models.

This trend towards centralization isn't merely an academic concern. It carries significant economic and societal implications. As more and more of the world's data and computational resources become concentrated in the hands of a few corporations, the balance of power shifts accordingly. This imbalance presents a significant challenge to equality, freedom, and privacy.

To take a recent example, a 2021 investigative report revealed that Clearview AI, a tech firm, had scraped billions of images from social media platforms to create a facial recognition database used by hundreds of law enforcement agencies. Cases like this reflect a growing erosion of privacy, as corporations and government entities, aided by advanced AI, dissect and analyze our data on an unprecedented scale.

In the talk, Ben touched on some of the ethical points made in David Brin's 1998 book ‘The Transparent Society’. Let’s dig into this, because it is just as relevant today as it was 25 years ago.

The Transparent Society

In The Transparent Society, Brin posits that core privacy might be preserved despite increasing surveillance technology. The points he touches on are absolutely relevant to the ethical concerns surrounding the increased utilization of AI in surveillance.

Privacy is Dependent on Freedom: Privacy is not an absolute right, but a contingent one. It derives from primary rights like the right to know and the freedom to speak. In any society, the preservation of privacy depends upon the assurance of these fundamental freedoms.

Balancing Knowing and Privacy: An open society requires a continuous negotiation of trade-offs between the need to know, or transparency, and the desire for privacy. This negotiation is not a one-time process but an ongoing dynamic that must adapt as technology and society evolve.

Sousveillance or "Viewing from Below": In a transparent society, surveillance powers are not the sole domain of governments or corporations but are shared with citizens. This results in "sousveillance", where the public, rather than authoritative bodies, records activities, typically via wearable or portable personal technologies.

Aligning with Enlightenment Principles: This sharing of surveillance powers resonates with the principles of prominent Western Enlightenment figures like Adam Smith and John Locke. By democratizing knowledge and its accessibility, we can constrain elite power and promote a more equitable society.

The erosion of privacy isn't just a theoretical problem; it's a tangible issue unfolding in real time, shaping the societal fabric and our collective future. Because the emergence of AGI is now viewed as inevitable, we need to focus our attention on building decentralized systems that can work for the good of everyone.

Let’s turn our attention now to how decentralized systems might help us avoid some of the more dystopian elements of centralized AGI.

The Power Concentration in AI and the Potential of SingularityNET's Decentralization

AI's centralization, which positions a select group of tech behemoths and governmental entities as key players, is a significant impediment to the fair distribution of AI's benefits. This privileged group, leveraging its vast data resources and unmatched computational power, maintains an unrivaled standing. Against this status quo, Goertzel puts forward the transformative potential of decentralized AGI, with SingularityNET exemplifying this paradigm shift.

AI's centralization transcends merely owning extensive data and computational resources; it carries profound implications of influence, control, and power. The concentrated power within a limited group has led to monopolistic behaviors and repeated instances of data privacy infringements. In response to the status quo, Goertzel champions a decentralized AGI model. Constructed within a transparent, blockchain-powered ecosystem, SingularityNET seeks to protect individuals' data sovereignty, safeguarding against misuse and fostering equitable access to AI's advantages. The platform aims to democratize access to AI technology, creating a marketplace where anyone can develop, share, or monetize AI services.

SingularityNET operates on the Ethereum and Cardano blockchains, allowing developers to publish their AI services for universal access. It also utilizes its native token, AGIX, to enable transactions within the platform, linking the spheres of AI and cryptocurrency. By integrating these technologies, SingularityNET encourages a collaborative space where developers can learn from each other to build superior AI systems.

Currently, SingularityNET hosts over 70 AI services, including multilingual speech translators, real-time voice cloning, speech command recognition, and neural image generation. Regardless of a user's technical expertise, the platform's user-friendly interface makes these services accessible to all, with demo functionalities for feature testing before a complete system purchase.

One of SingularityNET's distinguishing features is its use of smart contracts, which are meant to ensure a fair environment for all stakeholders. These contracts regulate the exchange process when users want access to a developer's creation, with developers able to set usage parameters like duration or task specificity. This autonomy aligns with SingularityNET's decentralized ethos: no intermediary controls access or pricing, and the agreed terms are enforced automatically by the contract.
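To make the idea of automatically enforced usage terms more concrete, here is a minimal sketch in plain Python. It is not SingularityNET's actual contract code, and every name in it (`UsageTerms`, `Agreement`, `authorize_call`, the token amounts) is a hypothetical stand-in chosen for illustration. It only shows the general pattern: the developer publishes terms, the user locks a deposit, and each call is approved or rejected by the rules alone.

```python
from dataclasses import dataclass


@dataclass
class UsageTerms:
    """Hypothetical terms a developer attaches to a published AI service."""
    price_per_call: int   # price in the smallest token unit (assumed)
    max_calls: int        # cap on the number of calls per agreement
    valid_seconds: int    # how long the agreement stays open


@dataclass
class Agreement:
    """Toy stand-in for an on-chain escrow between a user and a developer."""
    terms: UsageTerms
    deposit: int          # tokens the user locks up front
    opened_at: int        # timestamp (seconds) when the deposit was locked
    calls_made: int = 0
    paid_out: int = 0

    def authorize_call(self, now: int) -> bool:
        """Apply the terms mechanically; no intermediary decides anything."""
        expired = now > self.opened_at + self.terms.valid_seconds
        over_cap = self.calls_made >= self.terms.max_calls
        unfunded = self.deposit - self.paid_out < self.terms.price_per_call
        if expired or over_cap or unfunded:
            return False
        self.calls_made += 1
        self.paid_out += self.terms.price_per_call
        return True

    def refund(self) -> int:
        """Tokens returned to the user when the agreement closes."""
        return self.deposit - self.paid_out
```

For example, with a price of 5 tokens per call and a deposit of 12, the first two calls are authorized, the third is rejected for lack of funds, and 2 tokens remain refundable. A real contract would also need signatures and on-chain settlement, which this sketch leaves out.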

AGIX facilitates marketplace transactions, enables voting on governance proposals, and supports liquidity boosting via staking. Initially bound to a single blockchain, AGIX has evolved to feature multi-chain compatibility, expanding its utility across Cardano, Polygon, and Binance Smart Chain.

SingularityNET's long-term vision centers around fostering a mutually beneficial environment for developers and users, accelerating AI's evolution. The ultimate goal is to develop an AGI system capable of broad-ranging tasks and self-improvement without human input, much like human cognitive abilities. While realizing this comprehensive AGI system might be years away due to the technological and ethical challenges, SingularityNET aims to conquer these hurdles through its holistic learning environment.

Future Directions and the Need for Proactive Legislation

Unchecked proprietary interests in the world of AI often lead to closed models, in which a select few organizations or corporations tightly hold AI technology, limiting its accessibility and the public's ability to understand or participate in its development and implementation.

Real-world instances of this issue are becoming more common. OpenAI has shifted from its initial position of broad openness to a more closed, proprietary stance in recent years. This shift underscores the ongoing issue: significant advancements in AI technology risk being cloistered away behind corporate firewalls, out of reach of public benefit and scrutiny.

Imagine a future where governments could leverage their surveillance capacities for the public good rather than against it. This concept might seem counterintuitive given the current concerns around governmental surveillance. However, Snowden and Goertzel suggest that in the fast-moving landscape of AI, governments, while often seen as slow to adapt to technology, may have the potential to rapidly catch up and play a crucial role in shaping the future of AI. Here, the government could serve as a steward, ensuring that AI benefits all of society, not just a select few. The more we talk about AGI, the more the conversation naturally gravitates towards what the future of universal basic income (UBI) will look like in the United States.

Models like ChatGPT show clear limitations (right now) in areas such as creativity and factuality. Nevertheless, the ongoing progress in this field suggests a future where AI can think, reason, and write source code, marking a significant step towards the realm of superintelligence. This possible future prompts us to ask a fundamental question: How do we ensure that this immense power is used for the greater good and is not monopolized by a select few?

Given the weight of these issues, it is clear that the future of AI requires diligent oversight, clear ethical guidelines, and proactive legislation. As we consider future directions for AI, we must also examine how different models of AI governance, such as the closed model exemplified by OpenAI and a potential future decentralized model, approach crucial aspects like privacy and safety.

Let’s delve into the approach towards privacy and safety taken by OpenAI, as a representative of the closed, proprietary AI model. We will then contrast this with how these crucial aspects might be handled in a decentralized cryptographic future where AGI is owned broadly by the public rather than individual corporations. This comparison will provide a nuanced understanding of how different models of AI governance may shape our shared digital future.

OpenAI's Approach to Privacy and Safety

Building Increasingly Safe AI Systems: OpenAI conducts testing and external expert review prior to any system release. They improve model behavior with techniques like reinforcement learning from human feedback (RLHF) and monitor their AI systems' safety.

Learning from Real-world Use to Improve Safeguards: OpenAI uses real-world interactions with their AI to continually enhance safety mechanisms.

Protecting Children: OpenAI imposes age restrictions on the usage of their AI tools and actively works to minimize potential harmful content towards children.

Respecting Privacy: OpenAI ensures privacy by removing personal information from their training datasets and working towards models that don't generate responses containing personal information.

Improving Factual Accuracy: OpenAI is dedicated to enhancing the factual accuracy of their AI models, recognizing the importance of accurate output.

Continued Research and Engagement: OpenAI encourages collaboration and dialogue, advocating for AI safety and capabilities to go hand in hand.

A Decentralized Approach to Privacy and Safety

Contrast the approach above with how a decentralized model might handle the same six areas.

Building Increasingly Safe AI Systems: Decentralized AI safety could be community-driven, enforced via consensus mechanisms, smart contracts, and community audits to ensure collective accountability.

Learning from Real-world Use to Improve Safeguards: Safety improvements could come organically and dynamically from a global community of users, potentially leading to more diverse and inclusive safety measures.

Protecting Children: Community-agreed policies could protect vulnerable groups in a decentralized AGI, possibly employing blockchain-based identity verification and age-locked access controls.

Respecting Privacy: Decentralized AGI systems might leverage cryptographic techniques like zero-knowledge proofs to ensure data privacy, replacing a trust-based model with a mathematically-guaranteed one.

Improving Factual Accuracy: A decentralized model could potentially employ consensus protocols, allowing independent models to validate information for accuracy.

Continued Research and Engagement: A decentralized AGI could foster global, open-source collaboration and engagement, potentially producing diverse and innovative safety measures.
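The privacy point above mentions zero-knowledge proofs, so here is a small sketch of what "proving without revealing" can look like. It uses a Schnorr-style proof of knowledge made non-interactive with a hash (the Fiat-Shamir approach), which is one well-known construction and not something the talk or SingularityNET specifies. The group parameters `P`, `Q`, and `G` are toy values assumed purely for illustration and are far too small for real use.

```python
import hashlib
import secrets
from typing import Tuple

# Toy group parameters (assumed for illustration; far too small for real use).
P = 2039  # safe prime: P = 2 * Q + 1
Q = 1019  # prime order of the subgroup generated by G
G = 4     # generator of the order-Q subgroup of integers mod P


def challenge(public_key: int, commitment: int) -> int:
    """Derive the challenge from a hash instead of a live verifier."""
    data = f"{G}:{public_key}:{commitment}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(secret: int) -> Tuple[int, int, int]:
    """Prove knowledge of `secret` without sending `secret` itself."""
    public_key = pow(G, secret, P)
    nonce = secrets.randbelow(Q)        # fresh randomness hides the secret
    commitment = pow(G, nonce, P)
    c = challenge(public_key, commitment)
    response = (nonce + c * secret) % Q
    return public_key, commitment, response


def verify(public_key: int, commitment: int, response: int) -> bool:
    """Check G^response == commitment * public_key^c (mod P)."""
    c = challenge(public_key, commitment)
    return pow(G, response, P) == (commitment * pow(public_key, c, P)) % P
```

The verifier only ever sees the public key, the commitment, and the response. Calling `verify(*prove(123))` succeeds, while changing the response by one makes the check fail. Production systems use much larger groups or elliptic curves, and proofs about richer statements need heavier machinery than this.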

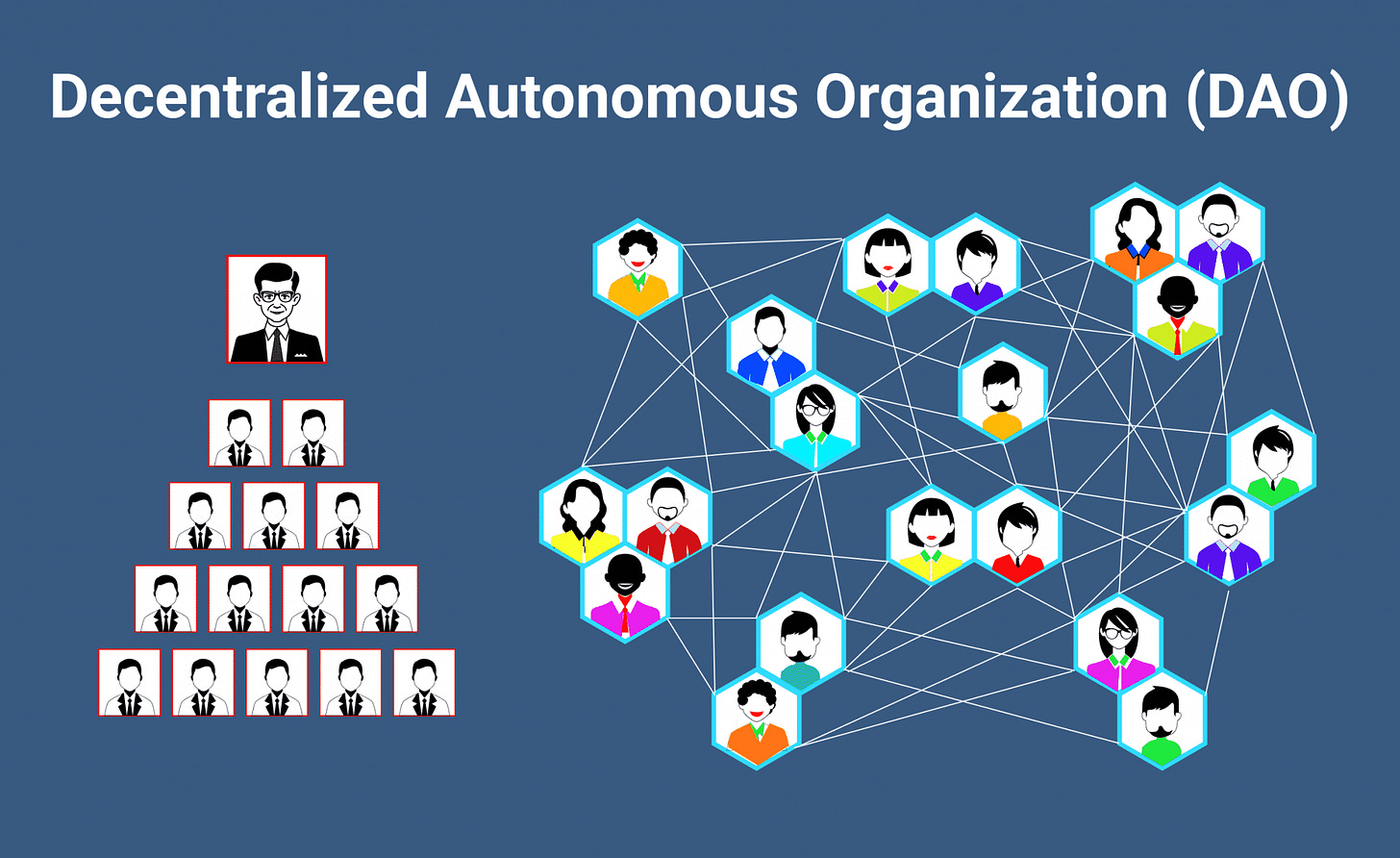

A decentralized future could democratize power and control over AGI, moving it from corporate boardrooms to the hands of a global community powered by DAOs and next-gen blockchains.
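As a rough illustration of the accuracy point above, where independent models validate one another's output, here is a minimal sketch of a quorum vote in Python. The function name `consensus_answer` and the two-thirds default quorum are assumptions made for this example, not part of any existing protocol. Real consensus protocols also have to deal with identity, incentives, and dishonest participants, none of which is modeled here.

```python
from collections import Counter
from typing import Optional, Sequence


def consensus_answer(answers: Sequence[str], quorum: float = 2 / 3) -> Optional[str]:
    """Return the answer backed by at least `quorum` of validators, else None."""
    if not answers:
        return None
    # Normalize lightly so trivial formatting differences do not split the vote.
    normalized = [a.strip().lower() for a in answers]
    answer, votes = Counter(normalized).most_common(1)[0]
    return answer if votes / len(normalized) >= quorum else None
```

If three of four independent models answer "Paris" and one answers "Lyon", the function returns "paris". If every model gives a different answer, it returns `None`, signalling that the claim should not be treated as validated.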

Moving Forward

Snowden and Goertzel's conversation impels us to reevaluate AI's influence on our lives, privacy, and surveillance. Two primary strategies arise from this discussion: transparency and decentralization. The speakers advocate for a society where surveillance powers are shared with citizens, reintroducing the concept of sousveillance to equalize knowledge and power.

Additionally, Goertzel's decentralized AGI concept imagines an AI future that's collectively contributed to, providing personal data sovereignty and equitable AI use. However, these solutions aren't universally applicable, and we face substantial challenges from powerful entities like governments and corporations. Therefore, a proactive approach towards AI regulation becomes essential to curb misuse and enforce corporate responsibility.

AI advancement is a double-edged sword. It has the potential to revolutionize our lives, but also threatens our privacy and freedoms. We must remember Snowden's insight: "We're not born human, we become human." Similarly, the machines we're developing can become better, but we must guide them to serve humanity, not a select few.

The AI revolution is a societal challenge requiring us to redefine our relationships with technology, data, and privacy. We must actively shape this future, preserving our rights, freedom, and humanity.

Thanks for reading. If you enjoyed this article, feel free to share it. If you’d like my thorough notes from this talk at Consensus, reach out to me directly.

Talk soon,

Chris

"the government could serve as a steward, ensuring that AI benefits all of society, not just a select few."

It certainly could if it would come clean, give up its power grab, and open up for transparent citizen-driven budgeting. Work In Progress :)